Computer Industry

By George Gilder

If economic growth feeds on knowledge and innovation, current advances stem largely from the computer industry, a force of innovation devoted chiefly to the generation and use of knowledge. During the mid-eighties, studies at the Brookings Institution by Robert Gordon and Martin Baily ascribed some two-thirds of all U.S. manufacturing productivity growth to advances in efficiency in making computers.

The history of the computer revolution is misunderstood by most people. Conventional histories begin with the creation of Charles Babbage’s analytical engine in the mid-nineteenth century and proceed through a long series of other giant mechanical calculating machines, climaxing with ENIAC at the University of Pennsylvania in the years after World War II. This is like beginning a history of space flight with a chronicle of triumphs in the production of wheelbarrows and horse-drawn carriages.

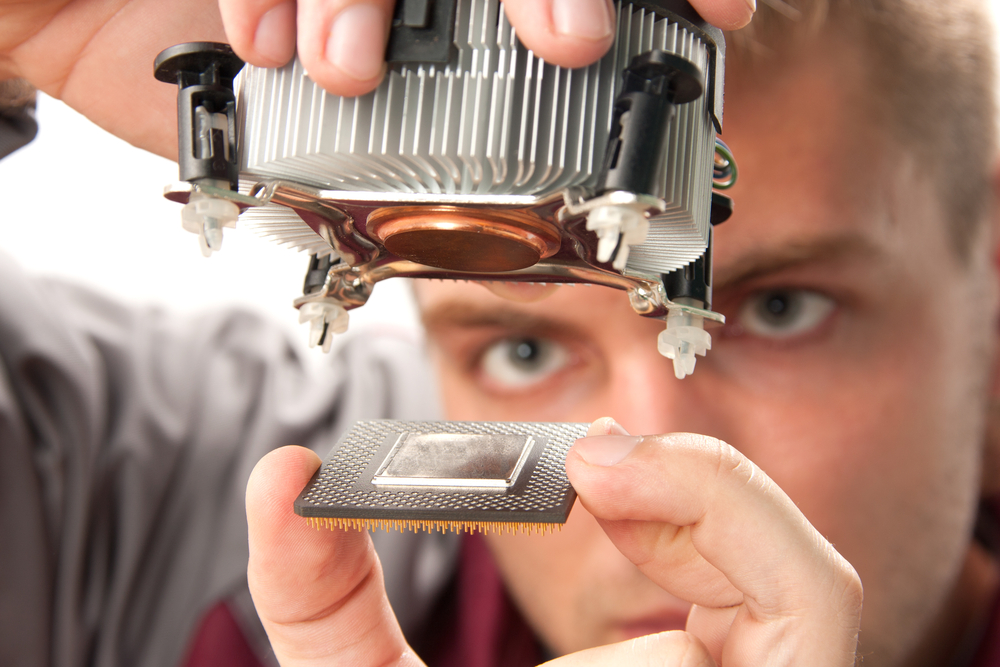

The revolution in information technology sprang not from any extension of Babbage’s insights in computer science, but from the quantum revolution in physical science. Fundamental breakthroughs in solid-state physics led to the 1959 invention of the microchip. The microchip is a computer etched on a tiny sliver of silicon the size of a fingernail, containing scores of functioning logical devices in a space comparable not to the head of a pin, but to the point of a pin. This invention, not the ENIAC, ignited the real computer revolution.

As Robert Noyce, the key inventor of the microchip and father of the computer revolution, wrote in the late seventies:

Today’s microcomputer, at a cost of perhaps $300, has more computing capacity than the first large electronic computer, the ENIAC. It is twenty times faster, has a larger memory, is thousands of times more reliable, consumes the power of a lightbulb rather than that of a locomotive, occupies 1/30,000th the volume and costs 1/10,000 as much.

Since Noyce wrote that, the cost-effectiveness of his invention has risen more than a millionfold in less than two decades.

An effect of entrepreneurial ingenuity and individual creativity, the microchip fueled a siege of innovations that further favored and endowed the values of individual creativity and freedom. Beginning with the computer industry, the impact of the chip reverberated across the entire breadth of the U.S. economy. It galvanized the overall U.S. electronics industry into a force with revenues that, today, exceed the combined revenues of all U.S. automobile, steel, and chemical manufacturers.

The United States dominated the computer industry in 1980, with 80 percent of the industry’s revenues worldwide. Most of these revenues were produced by fewer than ten companies, with IBM as the leader. Yet all of these firms, including IBM, lost ground during the ensuing decade, despite the fact that the computer industry tripled in size and its cost-effectiveness improved some ten-thousand-fold.

This story carries profound lessons. Imagine that someone had told you in 1980 that even though the computer industry verged on extraordinary growth, all of the leading U.S. firms would suffer drastic losses of market share during the decade, and some would virtually leave the business. Would you have predicted that in 1990 U.S. companies would still command over 60 percent of world computer revenue? Probably not. Yet this is what happened. Despite other countries’ lavish government programs designed to overtake the United States in computing, the U.S. industry held a majority of market share and increased its edge in revenues. The absolute U.S. lead over the rest of the world in revenues from computers and peripherals rose some 40 percent, from $35 billion in 1979 to $49 billion in 1989, while the U.S. lead in software revenues rose by a factor of 2.5. These numbers are not adjusted for inflation, but because prices in the computer industry dropped throughout this period, the unadjusted statistics understate the actual U.S. lead in real output.

What had happened was an entrepreneurial explosion, with the emergence of some fourteen thousand new software firms. These companies were the catalyst. The United States also generated hundreds of new computer hardware and microchip manufacturers, and they too contributed to the upsurge of the eighties. But software was decisive. Giving dominance to the United States were thousands of young people turning to the personal computer with all the energy and ingenuity that a previous generation had invested in its Model T automobiles.

Bill Gates of Microsoft, a high school hacker and Harvard dropout, wrote the BASIC language for the PC and ten years later was the world’s only self-made thirty-five-year-old billionaire. Scores of others followed in his wake, with major software packages and substantial fortunes, which—like Gates’—were nearly all reinvested in their businesses.

During the eighties the number of software engineers increased about 28 percent per year, year after year. The new software firms converted the computer from the tool of data-processing professionals—hovering over huge, air-conditioned mainframes—into a highly portable, relatively inexpensive appliance that anyone could learn to use. Between 1977 and 1987 the percentage of the world’s computer power commanded by large centralized computer systems with “dumb” terminals attached dropped from nearly 100 percent to under 1 percent. By 1990 there were over 50 million personal computers in the United States alone; per capita the United States has more than three times as much computer power as Japan.
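To see what 28 percent annual growth implies over a decade, the compounding can be worked out directly. The short Python sketch below is illustrative only: the function name `compound_growth` and the ten-year span are assumptions made for the example, not figures drawn from any data source.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Return the cumulative growth factor after compounding annually."""
    return (1.0 + annual_rate) ** years


# 28 percent per year comes from the text; ten years is an assumed
# stand-in for "the eighties".
factor = compound_growth(0.28, 10)
print(f"Growth factor over ten years: {factor:.1f}x")
```

Under these assumptions the number of software engineers would have grown roughly twelvefold over the decade.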

In contrast to the American approach to the computer industry, European governments have launched a series of national industrial policies, led by national “champion” firms imitating a spurious vision of IBM. These firms mostly pursued memory microchips and mainframe systems as the key to the future. Their only modest successes came from buying up American firms in trouble. Following similar policies, the Japanese performed only marginally better until the late eighties, when they began producing laptop computers. By 1990 the Japanese had won a mere 4 percent of the American computer market.

Meanwhile, American entrepreneurs have launched a whole series of new computer industries, from graphics supercomputers and desktop workstations to transaction processors and script entry systems, all accompanied by new software. The latest U.S. innovation is an array of parallel supercomputers that use scores or even thousands of processors in tandem. Thinking that the game was supercomputers based on between two and eight processors, the Japanese mostly caught up in that field, but still find themselves in the wake of entrepreneurs who constantly change the rules.

Perhaps the key figure in the high-technology revolution of the eighties was a professor at the California Institute of Technology named Carver Mead. In the sixties he foresaw that he and his students would be able to build computer chips fabulously more dense and complex than experts at the time believed possible, or than anyone at the time could design by hand. Therefore, he set out to create programs to computerize chip design. Successfully developing a number of revolutionary design techniques, he taught them to hundreds of students, who, in turn, began teaching them to thousands on other campuses and bringing them into the industry at large.

When Mead began his chip design projects, only a few large computer and microchip firms were capable of designing or manufacturing complex new chips. By the end of the eighties, largely as a result of Mead’s and his students’ work, any trained person with a workstation computer costing only twenty thousand dollars could not only design a major new chip but also make prototypes on his desktop.

Just as digital desktop publishing programs led to the creation of some ten thousand new publishing companies, so desktop publishing of chip designs and prototypes unleashed tremendous entrepreneurial creativity in the microchip business. In just five years after this equipment came on line in the middle of the decade, the number of new chip designs produced in the United States rose from just under 10,000 a year to well over 100,000.

The nineties are seeing a dramatic acceleration of the progress set in motion by the likes of Carver Mead. The number of transistors on a single sliver of silicon is likely to rise from about 20 million in the early nineties to over 1 billion by the year 2001. A billion-transistor chip might hold the central processing units of sixteen Cray Y-MP supercomputers. Among the most powerful computers on the market today, these Crays currently sell for some $20 million. Based on the current rate of progress, the “sixteen-Cray” chip might be manufactured for under a hundred dollars soon after the year 2000, bringing perhaps a millionfold rise in the cost-effectiveness of computing hardware.
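The arithmetic behind the millionfold estimate can be checked with a rough sketch. The Python below uses only the figures quoted above. Treating the price of a complete Cray as the cost of its central processing unit, and taking 1991 as the starting year for "the early nineties," are simplifying assumptions made here for illustration, as are the function names.

```python
import math

CRAY_PRICE_USD = 20_000_000   # "some $20 million" per machine (from the text)
CRAYS_PER_CHIP = 16           # CPUs a billion-transistor chip might hold
CHIP_COST_USD = 100           # "under a hundred dollars" (upper bound)


def cost_effectiveness_gain(unit_price, units, replacement_cost):
    """Ratio of the old cost of the computing power to its new cost."""
    return (unit_price * units) / replacement_cost


def doubling_time_years(start_count, end_count, years):
    """Years per doubling implied by steady exponential growth."""
    return years * math.log(2) / math.log(end_count / start_count)


gain = cost_effectiveness_gain(CRAY_PRICE_USD, CRAYS_PER_CHIP, CHIP_COST_USD)
# Assumed span: 1991 to 2001, i.e. ten years.
doubling = doubling_time_years(20e6, 1e9, 10)

print(f"Cost-effectiveness gain: about {gain:,.0f}x")
print(f"Implied transistor doubling time: about {doubling:.1f} years")
```

On these assumptions the gain comes to a few millionfold, the same order of magnitude as the estimate in the text, and the transistor count would need to double a little more often than every two years.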

Just as the personal computer transformed the business systems of the seventies, the small computers of the nineties will transform the electronics of broadcasting. Just as a few thousand mainframe computers were linked to hundreds of thousands of dumb terminals, today just over fourteen hundred television stations supply millions of dumb terminals known as television sets.

Many experts believe that the Japanese made the right decision ten years ago when they launched a multibillion-dollar program to develop “high-definition television.” HDTV does represent a significant advance; the new sets have a much higher resolution, larger screens, and other features such as windowing several programs at once. But all these gains will be dwarfed by the millionfold advance in the coming technology of the telecomputer: the personal computer upgraded with supercomputer powers for the processing of full-motion video.

Unlike HDTV, which is mostly an analog system using wave forms specialized for the single purpose of TV broadcast and display, the telecomputer is a fully digital technology. It creates, processes, stores, and transmits information in the nondegradable form of numbers, expressed in bits and bytes. This means the telecomputer will benefit from the same learning curve of steadily increasing powers as the microchip, with its billion-transistor potential, and the office computer with its ever-proliferating software.

The telecomputer is not only a receiver like a TV, but also a processor of video images, capable of windowing, zooming, storing, editing, and replaying. Furthermore, the telecomputer can originate and transmit video images that will be just as high in quality as, and much cheaper than, those the current television and film industries can provide.

This difference replaces perhaps a hundred one-way TV channels with as many channels as there are computers attached to the network: millions of potential two-way channels around the world. With every desktop a possible broadcasting station, thousands of U.S. firms are already pursuing the potential market of a video system as universal and simple to use as the telephone is today.

Imagine a world in which you can dial up any theater, church, concert, film, college classroom, local sports event, or library anywhere and almost instantly receive the program in full-motion video and possibly interact with it. The result will endow inventors and artists with new powers, fueling a new spiral of innovation sweeping beyond the computer industry itself and transforming all media and culture.

About the Author

George Gilder is chairman of Gilder Publishing LLC and a frequent contributor to Forbes ASAP. He was formerly semiconductors editor of Release 1.0, an industry newsletter. He is also a director of semiconductor and telecommunications equipment companies.

Further Reading

Gilder, George. Microcosm: The Quantum Revolution in Economics and Technology. 1989.

Gilder, George. Life after Television. 1992.

Hillis, W. Daniel. The Connection Machine. 1985.

Malone, Michael. The Big Score: The Billion-Dollar Story of Silicon Valley. 1985.

Queisser, Hans. The Conquest of the Microchip. 1988.

Scientific American, September 1977. “Microelectronics” issue, including articles by Robert Noyce, Carver Mead, and others.
