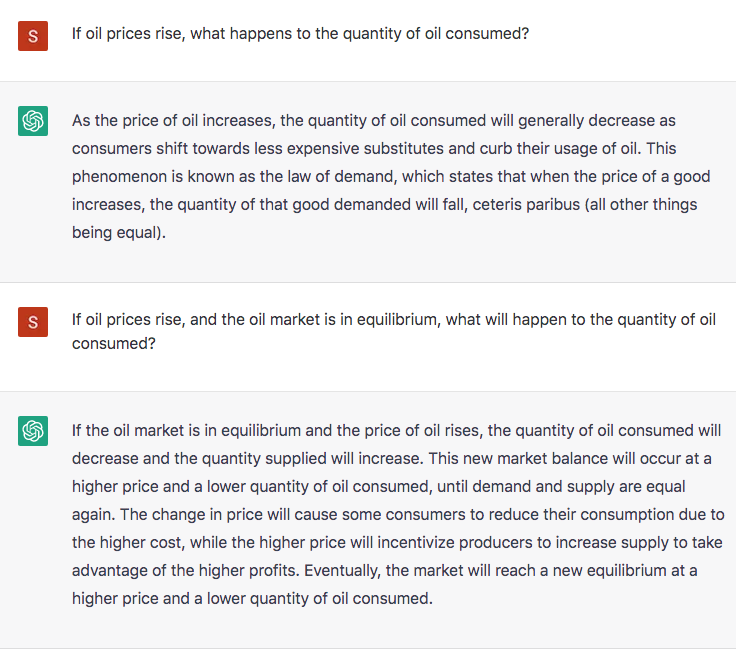

When I see examples of ChatGPT in action, I am reminded of the answers that college students provide on test questions. Yesterday, I finally got around to asking my first Chat question. I decided to test the famous AI with a question that students usually get wrong. Chat got the question wrong. (And no, the “ceteris paribus” qualifier doesn’t help, at all.) I then did a follow-up in the hope that clarifying the question would nudge Chat in the right direction.

As you can see, on the second question Chat is hopelessly confused (if you’ll excuse me for anthropomorphizing a machine). Chat has some ideas about price and quantity demanded, price and quantity supplied, and the concept of equilibrium, but doesn’t know how to put them together in a coherent fashion. In other words, Chat is a B student in a college economics course.

This post is not about ChatGPT. This new technology is actually quite impressive, and it seems likely that future versions will be even more impressive. Rather this post is about the state of economics.

Suppose I claimed that “reasoning from a price change” is very widespread in the field of economics. How could I justify this claim to a doubter? I suppose I could dig up an example of reasoning from a price change in a news article, or even an academic journal. But that would represent merely a single anecdote, not proof of a widespread problem.

ChatGPT formulates answers by searching over a vast field of economics documents. Thus its answers in some sense represent the consensus view of people who write books and articles on the subject of economics. I’ve come to believe that most people don’t actually understand supply and demand, and the Chat response reinforces this view.

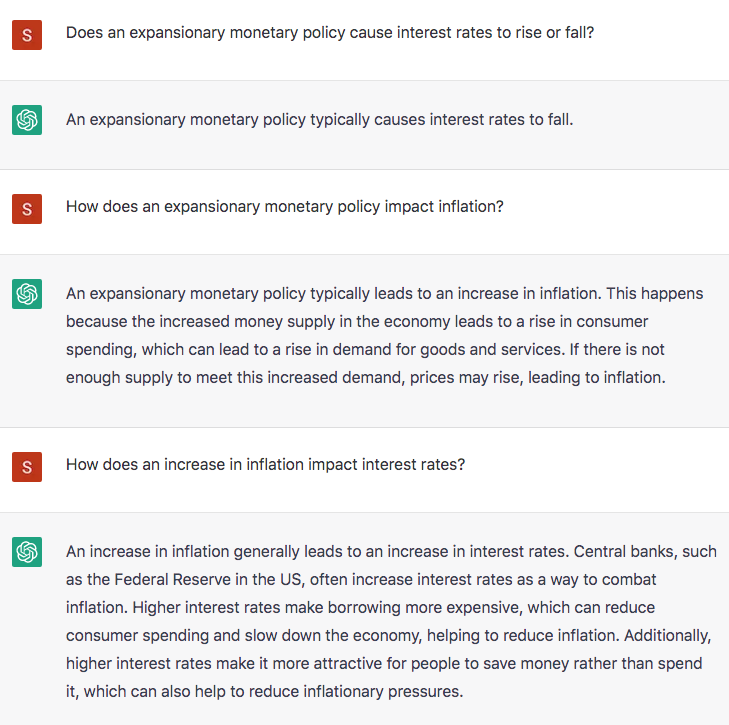

If I am correct, if Chat is like a mirror that reflects both the strengths and weaknesses of our understanding of economics, then I should be able to predict its failures. And I believe I can do so. I followed up my “reasoning from a price change” question with a set of questions aimed at exposing our weak understanding of the relationship between monetary policy and interest rates:

See how easy it is to trick ChatGPT? After 35 years of teaching thousands of students, I can predict how a typical student would answer the three questions above. I know that their answers will not be entirely consistent. And it does no good to claim there’s a grain of truth in each answer. There is. But ChatGPT doesn’t just give yes and no answers; it tries to explain the various possibilities. Is this string of answers likely to be helpful to a college student? Or would it further confuse them? If Chat actually “understood” this stuff, it would give an answer pointing to the complexity of this issue.

Again, this post is not about AI. Others have documented the fact that Chat often gives inadequate answers. That’s not what interests me. Rather I see Chat as a useful tool for diagnosing weaknesses in the field of economics. It allows us to peer into the collective brain of society.

The questions that Chat gets wrong are the questions that most of our students get wrong. Indeed, even many economists are too quick to equate falling interest rates with an expansionary monetary policy. ChatGPT points to the areas where our educational system needs to do better.

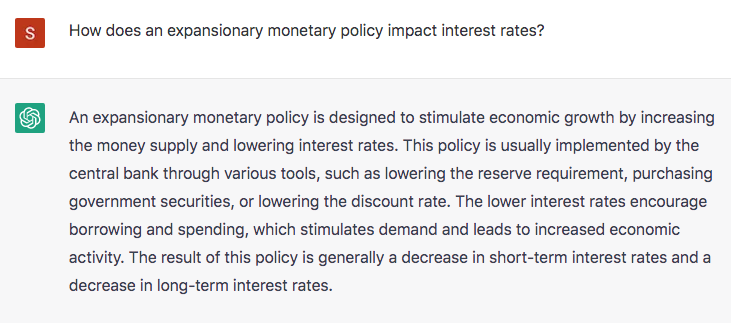

PS. You might argue that in my first monetary question I forced Chat to pick one direction of change. But when the question is more open-ended, the answer is arguably even worse:

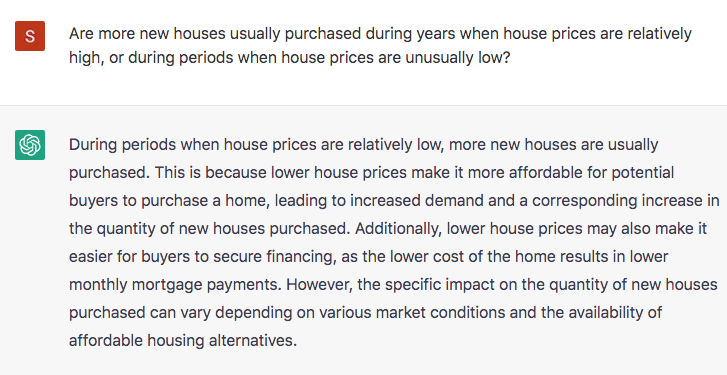

PPS. In the oil market example, one could argue that Chat is reacting to the fact that the oil market is dominated by supply shocks, making the claim “usually true”. But it gives the same answer when confronted with a market dominated by demand shocks, where the claim is usually false:

This gives us insight into how Chat thinks. It does not search the time series data (as I would), looking for whether more new houses are sold during periods of high or low prices. Rather, it relies on theory (albeit a false understanding of supply and demand theory).

READER COMMENTS

Richard W Fulmer

Feb 5 2023 at 4:33pm

If the price of gasoline goes up – either because of increased demand or because of a reduction in supply – the higher prices will tend to discourage consumption and encourage production. Yes, in the former case, demand is currently high, but, going forward, rising prices will tend to reduce demand.

Your second question is confusing. Were supply and demand at equilibrium, and then prices rose? Or did prices rise, and then supply and demand reached equilibrium sometime after the rise? If the former, then Chat GPT is correct: demand will fall. If the latter, then demand will stay constant.

Your second question about housing sales is clearer – increased demand and high sales are synonymous. Going forward however, the higher prices will tend to discourage demand and encourage production. So, Chat GPT was wrong not to ask the cause of the high prices, but, directionally, it’s correct.

What is your disagreement with Chat GPT’s reply to your open-ended question about the effect of an expansionary monetary policy on interest rates? Isn’t that the standard argument for an expansionary monetary policy? If so, I would expect Chat GPT to provide the “consensus” response.

And Chat GPT is correct that the central bank will (eventually) try to cool inflation by (among other things) raising the interest rates it controls.

Scott Sumner

Feb 5 2023 at 5:35pm

“Going forward however, the higher prices will tend to discourage demand and encourage production.”

That’s wrong. Prices don’t affect demand at all. But let’s say you meant “consumption”, not demand. Your claim would still be wrong. In equilibrium it’s impossible for consumption to fall as production rises. And I told Chat to assume the market was in equilibrium.

In the second example, Chat says consumption rises and production falls, which is impossible for a market in equilibrium. Classic example of reasoning from a price change.

The effect of monetary policy on interest rates is ambiguous, especially long-term interest rates. Chat should have said that.

You said:

“And Chat GPT is correct that the central bank will (eventually) try to cool inflation by (among other things) raising the interest rates it controls.”

Inflation causes higher interest rates for reasons that have nothing to do with central bank policy—it reflects the Fisher effect. A future tight money policy is NOT the reason that higher inflation leads to higher interest rates.
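The Fisher effect mentioned here can be illustrated with a few lines of arithmetic. This is only a minimal sketch: the function names and the 2% and 5% figures are illustrative assumptions, not numbers from the post. It shows that a lender who wants a given real return must charge a higher nominal rate when expected inflation is higher, with no central bank action involved.

```python
def fisher_nominal_rate(real_rate, expected_inflation):
    """Nominal rate that delivers `real_rate` given `expected_inflation`.

    Exact Fisher relation: (1 + i) = (1 + r) * (1 + pi).
    """
    return (1 + real_rate) * (1 + expected_inflation) - 1


def realized_real_return(nominal_rate, inflation):
    """Real return earned on a loan at `nominal_rate` when prices rise by `inflation`."""
    return (1 + nominal_rate) / (1 + inflation) - 1


# Hypothetical numbers: the lender wants a 2% real return.
low_inflation_rate = fisher_nominal_rate(real_rate=0.02, expected_inflation=0.00)
high_inflation_rate = fisher_nominal_rate(real_rate=0.02, expected_inflation=0.05)

print(f"Nominal rate with 0% expected inflation: {low_inflation_rate:.4f}")
print(f"Nominal rate with 5% expected inflation: {high_inflation_rate:.4f}")

# If the lender kept charging the low-inflation rate while prices rose 5%,
# the real return would turn negative.
print(f"Real return at the old rate: {realized_real_return(low_inflation_rate, 0.05):.4f}")
```

In this sketch the nominal rate rises only because expected inflation rises, which is the sense in which higher inflation can raise interest rates independently of any future tightening.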

Richard W Fulmer

Feb 6 2023 at 7:24am

Yes, I meant quantity demanded, not demand, and that should have been clear from the context since in the previous sentence I explicitly referred to consumption.

I agree that at equilibrium, consumption (quantity demanded) would not change. My point was that your question to Chat GPT was ambiguous at best and misleading at worst. If the market is now at equilibrium, the fact that prices rose (or fell) before the market reached equilibrium is irrelevant to your question. Either way, consumption wouldn’t change.

My point about the impact of an increase in the money supply on interest rates was that Chat GPT was responding with standard Keynesian boilerplate, which is what you’d expect given the prominence of Keynesian thinking in the economics profession.

I suspect that Keynesians are right in the short term and wrong in the long term. Initially, banks would likely lower interest rates in response to an increase in the money supply. However, if the increase is sustained, once it becomes clear that price inflation has set in, banks will raise interest rates since they don’t want to loan out $100 and get $90 in purchasing power in return.

Scott Sumner

Feb 6 2023 at 1:29pm

I am not saying that consumption would not change; it probably would change. I’m saying that knowing that the price has increased (or decreased) doesn’t give us any insight into the direction in which consumption would change.

Most intelligent Keynesians (i.e. New Keynesians) understand that “standard Keynesian boilerplate” has been discredited, at least if you are referring to Keynes’s views on money and interest rates. Keynes failed to acknowledge the importance of the Fisher effect. It’s 2023; ChatGPT should not be relying on a book written in 1936.

Brent Buckner

Feb 7 2023 at 7:59am

However, ChatGPT will still see more recent discussions of “demand destruction” (especially with respect to energy/oil).

Kenneth Duda

Feb 5 2023 at 9:12pm

Richard, I hope you take the time to think carefully about Scott’s post and response. I’ve found Scott’s writing over the years to be immensely clarifying, and I now cringe almost every time an economics-related topic comes up on the news. Their explanations are always riddled with sloppy thinking like “higher prices reduce demand”, or junk conventional wisdom like “higher interest rates cool the economy by making it more expensive to borrow money and finance new purchases.”

-Ken

Kenneth Duda

Feb 5 2023 at 9:15pm

To be a little more concrete, consider Scott’s question:

> If oil prices rise, what happens to the quantity of oil consumed?

The correct answer is: could be anything. If prices are rising because of a negative supply shock, then quantity consumed will decrease. If prices are rising because of a positive demand shock, then quantity consumed will increase. If prices are rising because of a general rise in the price level (inflation), then quantity consumed will not change.
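The three cases above can be checked with a toy linear supply and demand model. This is a sketch under stated assumptions: the linear curves, the parameter names (`a`, `b`, `c`, `d`, `price_level`), and all the numbers are hypothetical, chosen so that the price rises from 32 to 40 in each scenario. The only point is that the same price increase is compatible with quantity rising, falling, or staying unchanged.

```python
def equilibrium(a, b, c, d, price_level=1.0):
    """Return (nominal_price, quantity) at which demand equals supply.

    Assumed linear curves in terms of the real price P / price_level:
        demand: Qd = a - b * (P / price_level)
        supply: Qs = c + d * (P / price_level)
    """
    real_price = (a - c) / (b + d)   # solves a - b*p = c + d*p
    quantity = a - b * real_price
    return price_level * real_price, quantity


# Hypothetical baseline market.
baseline = equilibrium(a=100, b=2, c=4, d=1)

# Positive demand shock: the demand curve shifts out (a rises).
demand_shock = equilibrium(a=124, b=2, c=4, d=1)

# Negative supply shock: the supply curve shifts in (c falls).
supply_shock = equilibrium(a=100, b=2, c=-20, d=1)

# General inflation: all nominal prices rise 25%, real curves unchanged.
inflation = equilibrium(a=100, b=2, c=4, d=1, price_level=1.25)

scenarios = [
    ("baseline", baseline),
    ("positive demand shock", demand_shock),
    ("negative supply shock", supply_shock),
    ("general inflation", inflation),
]
for label, (price, quantity) in scenarios:
    print(f"{label:>22}: price={price:.1f}, quantity={quantity:.1f}")
```

In every non-baseline scenario the price is higher than in the baseline, yet the quantity consumed differs in direction, so the price change alone does not identify what happened to consumption.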

Richard W Fulmer

Feb 6 2023 at 7:31am

If consumption continues to rise in the face of rising prices, then prices and consumption will continue rising forever. I think, instead, that increasing prices will lead to less quantity demanded – perhaps not immediately, but eventually.

I would agree that gas consumption wouldn’t be affected by inflation-driven price hikes if prices and wages rose uniformly. However, even under inflation some prices rise, some fall, and some remain the same. If gas prices are rising, then they’re probably rising relative to other prices (and wages), and consumption will tend to fall.

Arnold S Kling

Feb 5 2023 at 5:09pm

Great example, Scott.

I think this illustrates that the most “obvious” use case for ChatGPT, as a sort of Oracle or answer-machine, is one of the most difficult to engineer. Its fluidity with grammar and sentence structure is way ahead of its “knowledge.”

It also illustrates how poorly economics is taught. Teachers are so eager to get onto “advanced” topics like behavioral economics and market failure that the basic idea that prices and quantities are endogenous variables is not drilled in. Most of what is written about supply and demand that is the source material for this sort of AI puts the words “price” and “demand” together in ways that don’t reflect the strict meaning of the terms. The large language model just copies what it sees, having no ability to use reason to conclude differently.

That said, I am very optimistic about other use cases that don’t require exact substantive knowledge. Simulation exercises, such as “explain this to a ten-year-old,” are more likely to prove more useful.

Scott Sumner

Feb 6 2023 at 1:32pm

Good points. This exercise also reassures me that we are still a considerable period away from any sort of existential alignment crisis.

Ken P

Feb 5 2023 at 6:03pm

This is a clever application, Scott. Chat tends to spit out prevailing wisdom on the topic so you can mine it for weaknesses in education or general beliefs of people in the field.

So far, my biggest uses have been 1) within my domain, when I want to doublecheck that I haven’t missed something and 2) slightly tangential to my domain where I still have some technical expertise to catch any mistakes but want insights, key words, or techniques to do lit searches with.

nobody.really

Feb 6 2023 at 10:45am

Clever? It’s genius! Chat can become the ngram of reasoning, the default way to document widespread misperceptions.

Generations from now, scholars will look back to this post as one of those cases where a scholar carelessly offers a profound, widely applicable proof on the path to making some arcane point.

Scott Sumner

Feb 6 2023 at 1:33pm

“ngram of reasoning”

Very well put.

Andy Weintraub

Feb 5 2023 at 8:59pm

“In other words, Chat is a B student in a college economics course.”

Not in my courses. A C at best.

Scott Sumner

Feb 6 2023 at 1:19pm

I taught at Bentley University. Where do you teach?

Knut P. Heen

Feb 6 2023 at 5:53am

F is the only suitable grade for anyone who claims that there is a new market equilibrium in which supply is larger than demand. The bot obviously does not understand the definition of an equilibrium.

The question was not precise though. The only way the price can rise if the market already is in equilibrium is through price controls, and in that case there will be excess supply.

Michael Sandifer

Feb 6 2023 at 9:21am

I’ve been experimenting with ChatGPT for about a month or so, and I’ve discovered some things that might be considered interesting:

1. I once gave it a short list of monthly S&P 500 data and asked it to change it to quarterly data. It was able to do that, but it copied one of the numbers incorrectly. That’s a seemingly very human mistake, and I’m guessing it may have made the mistake for the same reason humans do. That is, shifts in strengths in mutual associations in neural networks can produce “false memories”.

2. ChatGPT is not very useful for automating data cleaning. In addition to the above issue, it has surprising limits on how it can transform columns, rows, etc., even in simple data matching tasks.

3. Asking it questions with deeply ambiguous answers can slow it down greatly, or crash it altogether.

4. ChatGPT couldn’t tell me why it made the mistake in #1, not surprisingly.

It is good at many things, however. It’s surprisingly good at writing generic resumes, offering suggestions for recipes and wine/beer pairing, and offering computer code or debugging code, though it makes mistakes. It’s only coded one entire macro for me that didn’t need a correction, out of several.

ChrisinVa

Feb 6 2023 at 9:57am

Scott, as a non-economist with a professional degree in a technical field, I know enough to realize that economic news is rarely reported correctly. However, I don’t have the time or inclination to take courses in economics to be able to answer your questions accurately either.

What are your recommendations for books to get the fundamentals of economics (and primarily macro economics) without going back to school? Hopefully something written for relative neophytes. I read your book “The Money Illusion” (twice actually), but I struggled through some of it, in part because your formulas were missing in the Kindle version, and partly because some of the concepts were completely new to me. I also regularly check your blog, but again much of it is written from an expert to people who already understand, rather than from a teacher to students.

For example, you talk a lot about AS/AD. Where can I get a good explanation for a simple AS/AD curve? Something to explain what it means, how it works, and what happens if each of the variables is adjusted at the fundamental level.

Scott Sumner

Feb 6 2023 at 1:22pm

Used copies are reasonably priced:

https://www.amazon.com/Economic-Principles-Perspective-Stephen-Rubb/dp/1464182493/ref=tmm_hrd_swatch_0?_encoding=UTF8&qid=&sr=

Spencer

Feb 6 2023 at 10:17am

Supply and demand?

Real Estate Loans, All Commercial Banks (REALLN) | FRED | St. Louis Fed (stlouisfed.org)

Dale Doback

Feb 6 2023 at 1:00pm

Great use of this technology. After some cajoling, I did eventually get the bot to answer your original question as “A change in oil prices does not provide insight into the change in the quantity of oil consumed”

Scott Sumner

Feb 6 2023 at 1:23pm

Interesting. I guess you just need to nudge it to search in the right direction.

Tristan

Feb 6 2023 at 1:45pm

I’m not sure if you’ve seen it already, but Larry White and Frederic Mishkin just had an interesting debate on ending the Fed. Would love to hear your thoughts on it. Especially on the efficiency of clearing houses during periods of large increases in the demand for gold (which is where I thought Mishkin won the debate).

Scott Sumner

Feb 6 2023 at 7:27pm

Perhaps these posts would help:

https://www.econlib.org/what-would-it-mean-to-abolish-the-bank-of-canada/

https://www.econlib.org/back-to-gold/

Jose Pablo

Feb 6 2023 at 4:22pm

Very interesting post, Scott!

I find your basic claim that ChatGPT merely “reflects” widespread beliefs both accurate and worrying.

ChatGPT developers claim that ChatGPT would improve through “feedback”. But the feedback ChatGPT would be getting will, very likely, have the same problems as the original information: it will reflect widespread opinions more than “accurate” opinions.

The spreading of these self-reinforcing inconsistencies can be observed in economics and politics and, I believe, is also “infecting” some “not so artificial intelligences” like, for instance, The Economist authors.

Richard W Fulmer

Feb 6 2023 at 5:07pm

One of the nice things about Chat GPT is that it will tell you how it derives its answers, although its explanations aren’t always very satisfying:

If I asked you how an expansionary monetary policy would impact interest rates would you derive an answer from economic principles, or would your answer be based on the consensus view of books and articles?

If I asked you whether more new houses are usually purchased during years when house prices are relatively high, or during periods when prices are unusually low, would you search time series data and provide an empirical answer, or would you rely on demand theory?

What is the empirical evidence that indicates that housing sales fall when housing prices are high and rise when prices are low?

Scott Sumner

Feb 7 2023 at 12:14pm

Thanks Richard, I don’t think it’s right about its empirical claims. That certainly was not the case during the 2000-2010 boom and bust.

This year, house prices and house purchases are both expected to fall.

I’d like to see the studies that Chat is looking at.

Richard W Fulmer

Feb 7 2023 at 3:08pm

Please cite empirical studies indicating that banks lower interest rates after an increase in the money supply.

Which economists believe that an increase in the money supply can lead to a decrease in interest rates?

Please cite empirical studies indicating that housing sales fall when housing prices are high and rise when prices are low.

ssumner

Feb 8 2023 at 7:38pm

Thanks for checking that out. That’s certainly not an accurate statement of the monetarist view on the relationship between money and interest rates. ChatGPT has a lot of work to do.

Richard W Fulmer

Feb 7 2023 at 4:53pm

I asked Chat GPT a leading question: Does the belief that banks will lower interest rates in response to an increase in the money supply conflict with the Fisher Effect, or are the two ideas simply referring to different stages in an inflationary boom?

Víctor

Feb 9 2023 at 6:36pm

Hi Scott,

ChatGPT doesn’t work as well for general questions as when you make them more specific.

I tried asking the same questions, but adding the request that it answer according to the thinking of a specific economist, such as Alchian or yourself. From my point of view, it gives more accurate answers that way.

Anyway, thanks for your writings; I quite enjoy reading them.

AMT

Feb 9 2023 at 9:36pm
