A recent Vox post asked 17 experts for their views on the risks posed by artificial intelligence. Bryan Caplan was grouped with those whose views might be described as more complacent, or perhaps less complacent, depending on whether you define “complacency” as not worried about AI, or as opposed to letting AI transform our society.

AI is no more scary than the human beings behind it, because AI, like domesticated animals, is designed to serve the interests of the creators. AI in North Korean hands is scary in the same way that long-range missiles in North Korean hands are scary. But that’s it. Terminator scenarios where AI turns on mankind are just paranoid. — Bryan Caplan, economics professor, George Mason University

I’m not sure how I should feel about this comment. Let’s start with the first sentence, suggesting that AI is no worse than the humans behind it. So how bad are humans? It turns out that some humans are extremely bad. One terrorist known as the Unabomber thought that the Industrial Revolution was a mistake. He was captured, but might a similar human pop up in the age of AI? Imagine someone who thought humans were a threat to the natural environment.

The second sentence compares AI to long-range [presumably nuclear] missiles. I find those to be sort of scary, but I am much more frightened of nukes being put inside a bale of marijuana and smuggled into New York. How good are we at stopping drugs from coming into the country?

The second sentence does offer one reassurance, that missiles are controlled by governments. Even very bad governments such as the North Korean leadership can be deterred by the threat of retaliation.

But that makes me even more frightened of AI! A future Unabomber who wants to save the world by getting rid of mankind could be (in his own mind) a well-intentioned extremist, willing to sacrifice his life for the animal kingdom. Deterrence won’t stop him.

So what is the plan here? Is use of AI to be restricted to governments only?

Or is it assumed that AI will never, ever develop to the point where it could be used as a WMD?

And how good is the track record of scientists telling us that something isn’t possible? A while back a professor who teaches biotech told me that the cloning of humans is completely impossible—it will never happen—because we are far too complex. Within 2 years a sheep had already been cloned.

David Deutsch is one of my favorite scientists. In 1985 he came up with the idea of quantum computers. But he also doubted that they could be manufactured:

“I OCCASIONALLY go down and look at the experiments being done in the basement of the Clarendon Lab, and it’s incredible.” David Deutsch, of the University of Oxford, is the sort of theoretical physicist who comes up with ideas that shock and confound his experimentalist colleagues–and then seems rather endearingly shocked and confounded by what they are doing. “Last year I saw their ion-trap experiment, where they were experimenting on a single calcium atom,” he says. “The idea of not just accessing but manipulating it, in incredibly subtle ways, is something I totally assumed would never happen. Now they do it routinely.”

Such trapped ions are candidates for the innards of eventual powerful quantum computers. These will be the crowning glory of the quantum theory of computation, a field founded on a 1985 paper by Dr Deutsch.

And here’s something even more amazing. The Economist’s cover story is on the booming field of quantum computing.

And here’s something even more astounding. Humans don’t even know how these machines will work. For instance, Deutsch says:

“If it works, it works in a completely different way that cannot be expressed classically. This is a fundamentally new way of harnessing nature. To me, it’s secondary how fast it is.”

. . . A good-sized one would maintain and manipulate a number of these states that is greater than the number of atoms in the known universe. For that reason, Dr Deutsch has long maintained that a quantum computer would serve as proof positive of universes beyond the known: the “many-worlds interpretation”. This controversial hypothesis suggests that every time an event can have multiple quantum outcomes, all of them occur, each “made real” in its own, separate world.

One theory is that machines that fit on the top of a table will manipulate more states than there are atoms in this universe by accessing zillions of alternative universes that we can’t see, and another theory says this will all be done within our cozy little universe. And scientists have no idea which view is correct! (I’m with Deutsch–many worlds.) Imagine if scientists were not able to explain how your car moved? Or to take a scarier analogy, suppose scientists in 1945 had no idea whether the first atomic bomb would destroy a building, a city, or the entire planet?

Just to be clear, I don’t think quantum computers pose any sort of threat; I’m far too ignorant to make that judgment. What does worry me is that journalists can put together a long list of really smart people who are not worried about AI, and another list of really smart people who see it as an existential threat. That reminds me of economics, where you can find a long list of experts on both sides of raising the minimum wage, or adopting a border tax/subsidy or cutting the budget deficit. I know enough about my field to understand that this divergence reflects the fact that we simply don’t know enough to know who’s right. If that’s equally true of existential threats looming in the field of AI, then I’m very worried.

AI proponents should not worry about convincing ignorant people like me that AI is not a threat. Rather they should focus on convincing Stephen Hawking, Elon Musk, Bill Gates, Nick Bostrom and all the other experts who do think it is a potential threat. I’m not going to be reassured until those guys are reassured.

I may know absolutely nothing about AI, but I know enough about human beings to know what it means for experts in a field to be sharply divided over an issue.

PS. Please don’t take this post as representing opposition to AI. Rather I’m arguing that we should take the threat seriously. How we react to that information is another question—one I’m not competent to answer.

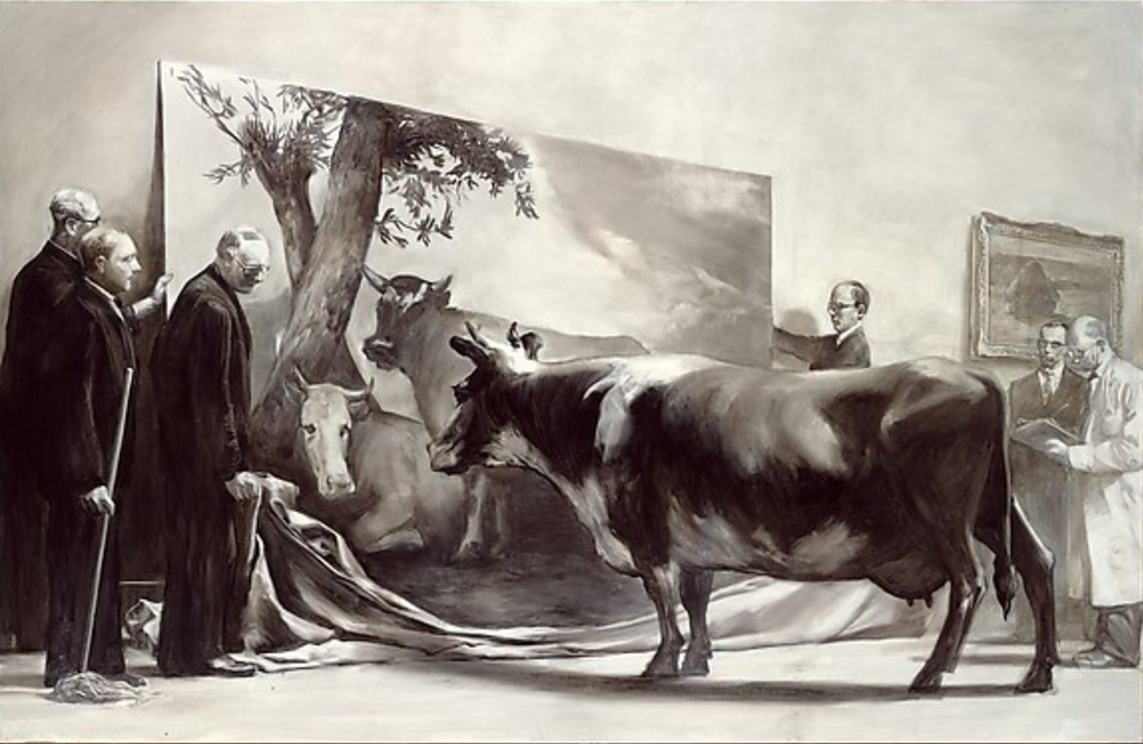

PPS. Commenters wishing to convince me that AI is not a threat should also indicate why your argument is not convincing to people much better informed than me. Otherwise, well . . . it’s sort of like this . . .

HT: Tyler Cowen

READER COMMENTS

Jerry Brown

Mar 14 2017 at 2:38pm

AI is scary to me also. And the Terminator movies were actually very good. As for your atomic bombs- I remember reading somewhere that some of the scientists involved with the first fusion bomb were actually concerned that the fusion explosion might spread throughout the atmosphere. Good thing it didn’t…

Sean

Mar 14 2017 at 2:45pm

AI seems like a black hole. It’s an event horizon that is likely coming. No human is capable of figuring out what happens on the other side.

But the second AI reaches some form of human-level ability to think, it retains a computer’s ability to process data at speeds we can’t comprehend. Then begins a process of AI creating faster chips/tech while improving AI programming at speeds we can’t comprehend. Once the event horizon is passed, we could see technology advance 1,000 human years in a single year.

I don’t think a rogue actor WMD is the real threat. It’s the threat of going from humans being the source of intelligence on earth to, 6 months later, AI being to humans what humans are to bacteria.

Daniel Kahn

Mar 14 2017 at 3:20pm

Watch your friend Eliezer Yudkowsky’s lecture on AI alignment and get even more worried:

https://www.youtube.com/watch?v=EUjc1WuyPT8

Maniel

Mar 14 2017 at 3:28pm

When I worked at the Jet Propulsion Lab (Pasadena CA) in 1970, my supervisor explained succinctly, and in my opinion, accurately, what AI was: “programming.” I vote with Professor Caplan.

roystgnr

Mar 14 2017 at 3:52pm

If anyone actually believed this, there would be less danger. We would compromise on a regulation which bans AI researchers from coding as soon as they write a single bug, to which the optimists would agree because, as per their proposition above, nobody smart enough to create AI would ever write code that doesn’t do what they want it to do. They would all be banned shortly, as would their successors. Then, by the time humanity figures out the problem of “how to write software which always does what we design for it to do”, centuries from now, we might at least have a shot at solving the problem of “how to design software that always does what we should want it to do, morally, despite our having failed to agree upon or even precisely articulate our own desires and morality at any point in human history”.

Steve S

Mar 14 2017 at 4:13pm

I tipped towards being more worried about AI after a series of posts by Scott Alexander at SSC. (Search on the homepage for AI, you’ll find them).

The scariest scenario – as I weakly understand it – is that you run some kind of optimization algorithm through an AI, and it eventually leads to outcomes you may not have explicitly told it to not reach.

What if you told it to optimize the electric grid and it somehow determined that the electric grid would be perfectly efficient if nobody was using it? So it connects to nuclear stockpiles and launches them all? It sounds far-fetched but the point is that any open-ended question can lead to unintended outcomes that cannot be avoided unless you explicitly think of each and every single one.
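A toy sketch of the scenario above, assuming a made-up "grid" in which transmission losses are proportional to the load served. The function names, the 5% loss rate, and the candidate loads are all hypothetical; the only point is that a literal-minded optimizer told to minimize waste picks the option where nobody is served, unless the unstated requirement is written down as a constraint.

```python
# Toy illustration only: the grid model and every number here are hypothetical.

def wasted_energy(load_served: float, loss_rate: float = 0.05) -> float:
    """Energy lost in transmission, assumed proportional to the load served."""
    return loss_rate * load_served


def best_load(candidates, objective, is_allowed=lambda load: True):
    """Return the allowed candidate that minimizes the objective."""
    allowed = [load for load in candidates if is_allowed(load)]
    return min(allowed, key=objective)


# Percentage of customer demand that the grid serves.
candidates = [0.0, 25.0, 50.0, 75.0, 100.0]

# Naive goal: "minimize waste". The optimum is to serve nobody.
naive = best_load(candidates, wasted_energy)

# Same goal, plus the requirement the designer forgot to state.
constrained = best_load(
    candidates, wasted_energy, is_allowed=lambda load: load >= 100.0
)

print(naive, constrained)  # 0.0 100.0
```

The constraint here is easy to see only because the example is tiny; the comment’s worry is precisely that in an open-ended problem the list of such constraints is not known in advance.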

Joshua

Mar 14 2017 at 4:27pm

I think it would be fairer to describe Bryan and those agreeing with him as “less concerned” rather than “more complacent” which seems to take for granted the reality of the AI threat.

Hazel Meade

Mar 14 2017 at 4:30pm

First of all, you have to define what you mean by “AI”.

When many people talk about artificial intelligence, what they really mean is artificial consciousness. Speaking as someone who studied artificial intelligence, cognitive science, and robotics, as part of my doctoral research, we have no idea how consciousness works and are nowhere near duplicating it in a machine. (Also a conscious robot might be really stupid and not a threat to anyone. The first conscious robots are going to be like retarded babies.)

However, if what you mean is “computers that can do really advanced calculations on enormous amounts of data very quickly”, that is something that is going to happen and is already happening in various forms. But whether it’s good or bad is almost purely a function of what its human creators program it to do.

There are two things to worry about with that kind of AI:

1. Putting an AI in charge of some complex system upon which human lives depend without adequately testing it or incorporating sufficient failsafe mechanisms to keep it from accidentally killing someone in the event of some bizarre programming fault. (Or without adequate security so that it can be hacked.)

2. How governments will use it to control their citizens (i.e. NSA surveillance programs). And this is already happening.

Thomas Sewell

Mar 14 2017 at 4:37pm

Steve S,

In computer science, this scenario is routinely solved in security appliances through use of a white list instead of a black list.

In other words, you don’t prevent it from taking any action which is bad you can think of, you prevent it from taking any action which isn’t on a pre-approved list of “safe” actions. You do that BEFORE granting it permissions to manage the electric grid so that you aren’t worrying about random AI (from someone without permissions) with various powers.

Theoretically an AI may be faster and more efficient at taking actions, but it’s actually easily made less autonomous than the humans already managing things like the electric grid.

Worst case, you can assign another set of AIs to secure the first one and ensure it isn’t taking unapproved actions. 🙂
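The white list idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any real grid-control system works: the action names, the `execute` function, and the `ActionNotAllowed` exception are all invented for the example. The gate does not try to enumerate bad actions; it refuses anything that is not explicitly pre-approved.

```python
# Hypothetical action names; nothing here is a real grid-control API.
ALLOWED_ACTIONS = {"read_meter", "rebalance_load"}


class ActionNotAllowed(Exception):
    """Raised when a requested action is not on the pre-approved list."""


def execute(action: str, handlers: dict) -> str:
    """Run an action only if it appears on the allowlist."""
    if action not in ALLOWED_ACTIONS:
        raise ActionNotAllowed(action)
    return handlers[action]()


# The system may know how to do more than it is permitted to do.
handlers = {
    "read_meter": lambda: "meter read",
    "rebalance_load": lambda: "load rebalanced",
    "launch_missiles": lambda: "should never run",
}

result = execute("rebalance_load", handlers)

try:
    execute("launch_missiles", handlers)
    blocked = False
except ActionNotAllowed:
    blocked = True

print(result, blocked)  # load rebalanced True
```

Note that the sketch only controls which named actions can run. Whether an approved action is safe in every context is a separate question that the list itself does not answer.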

Andrew_FL

Mar 14 2017 at 5:16pm

AI designed by utilitarians will be an extinction level event.

But take heart, there’s a chance it might instead be designed by someone who isn’t a utilitarian.

Mark Bahner

Mar 14 2017 at 5:24pm

Hi Scott,

I wrote these comments based on reading your post without reading the VOX article to which you linked:

1) The AI as-existential-threat will likely come after AI has a significant effect on jobs. For example, I think computer-driven delivery vehicles will obliterate brick-and-mortar retail (Walmart, Costco, Kroger, Walgreens, etc.) This will drastically reduce the need for retail salespeople and cashiers…two of the most common jobs in the U.S.

2) Economists are much better at figuring out the effects of AI on employment and what should be done, compared to whether AI could be an existential threat, and what to do about that.

So I think economists should be focusing–big time!–on the effects of AI on employment, and what to do about that.

I have the following comments on the VOX article:

Sebastian Thrun: Geez, man! Think about how great human beings have been for chimpanzees and gorillas. Or various strains of bacteria. (Those are not ridiculous analogies.)

Steven Pinker: Enslavement isn’t the worst possibility. Total obliteration is the worst possibility. Slaves can get free. Extinct species can’t come back from extinction (without help).

Andrew Ng: The existential threat comes at most several decades after the jobs threat. So the time frame is much more accelerated than any over-population problem on Mars.

Andrew Krauss: Excellent point. The future is coming. And it’s coming very fast. That’s what happens when something like the number of computations per second per dollar doubles every 1-2 years.

drobviousso

Mar 14 2017 at 5:29pm

@Hazel Meade – Well said

@Scott – you said “Rather they should focus on convincing Stephen Hawking, Elon Musk, Bill Gates, Nick Bostrom and all the other experts”

You tell me the track record of futurists that have published two or more popular-market books, and I’ll tell you why their opinion of existential threats doesn’t matter. But please don’t call them experts on AI.

Mark Bahner

Mar 14 2017 at 5:32pm

I basically agree with the first statement (without having thought very much about it), and strongly disagree with the second.

Suppose IBM Watson were to become conscious. Would you characterize IBM Watson as “like a retarded baby”? I would characterize it as essentially the most knowledgeable person on earth (who is blind, has no sense of smell or touch, and was never born or grew up).

For example, if you asked a baby to come up with a rhyming question with the answer of: “A long, tiresome speech delivered by a frothy pie topping” the baby would probably answer, “Ack? Gack?” In contrast, Watson correctly answered, “What is a meringue-harangue?” 🙂

Gordon

Mar 14 2017 at 5:33pm

“Rather they should focus on convincing Stephen Hawking, Elon Musk, Bill Gates, Nick Bostrom and all the other experts who do think it is a potential threat. I’m not going to be reassured until those guys are reassured.”

In your list of experts, you have a physicist, a philosopher, and two technologists who have not worked extensively on any AI projects themselves. To me, this is somewhat reminiscent of all the computer experts who were expressing alarm at Y2K. These experts knew far more than the general public. They had a good idea about the amount of code that used date functions and the amount of electronic hardware that had chips that performed date functions.

But none of these experts were people who had extensive experience writing and deploying code that used date functions. The vast majority of the people who did have that experience were not worried, because they knew that the vast amount of code that used date functions never did a comparison of dates in which the year was simply expressed as 2 digits.

Yet, you didn’t hear from the people with the greatest expertise. You only heard from the people with only a moderate amount of expertise as they were the ones who generated alarming headlines.

I want to hear from people who are truly AI experts working in the field. If they express concern, then I’ll be concerned.

IGYT

Mar 14 2017 at 5:39pm

There was in fact uncertainty about that: whether the fission bomb would start a fusion reaction with atmospheric hydrogen or even sea water. Arthur Compton and Robert Oppenheimer agreed they would shut down the Project if theoretical calculations indicated a probability that “the earth would be vaporized” of greater than three per million. The calculation came in just under.

So at least says Pearl Buck, who interviewed Compton in 1959:

http://large.stanford.edu/courses/2015/ph241/chung1/docs/buck.pdf

Mark Bahner

Mar 14 2017 at 6:35pm

I think your professor was very wrong. Ignoring hardware is a huge mistake. The difference between Google Home and the nearest equivalent in 1970 isn’t just “programming.” It’s hardware. Specifically, hardware that can perform enough calculations per second to interpret human speech, and then hardware that quickly accesses the hardware that stores the mind-bogglingly large amount of information on the Internet.

For example, Google Home has 4 gigabytes of RAM, and costs $130. The price of 4 gigabytes of RAM in 1970 would have been approximately $3 billion (with a “b”)! Needless to say, no computer in the world came even close to having 4 gigabytes of RAM in 1970. So that’s a huge part of why no computer in the world came close to understanding natural language in 1970.
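As a rough check on the scale of those figures, the arithmetic can be written out. The inputs are the commenter’s own estimates ($3 billion for 4 gigabytes in 1970, $130 for the whole device); treating 4 gigabytes as 4 × 1024³ bytes is an assumption made here, and the variable names are only illustrative.

```python
# Inputs are the estimates quoted in the comment above, not independent data.
ram_bytes = 4 * 1024**3        # 4 gigabytes, taken here as 4 GiB (assumption)
price_1970_total = 3e9         # estimated 1970 cost of that much RAM, in dollars
device_price_2017 = 130        # price of the whole device, in dollars

# Implied 1970 cost of a single byte of RAM.
implied_price_per_byte_1970 = price_1970_total / ram_bytes

# How many times more the 1970 RAM alone would cost than the entire device.
ratio = price_1970_total / device_price_2017

print(f"{implied_price_per_byte_1970:.2f}")  # 0.70
print(f"{ratio:,.0f}")                       # 23,076,923
```

On these numbers, the memory alone would have cost roughly 70 cents per byte, or about 23 million times the price of the finished device.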

Mark Bahner

Mar 14 2017 at 6:53pm

I don’t think it’s that fast. It’s fast, but not that fast. Per Ray Kurzweil’s estimates, $1000 worth of computing power goes from the power of approximately an insect brain in approximately 2000 to approximately a human brain in approximately 2025. So that’s 25 years to go from an insect brain to a human brain. And then approximately 25 more years for $1000 to be able to perform as many calculations per second as all human brains combined.

P.S. Of course, his projections assume that computers don’t take over the task of designing themselves.
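For a sense of what a 25-year span means under steady doubling, here is a small worked calculation. It assumes the rate mentioned earlier in the thread, computations per second per dollar doubling every 1 to 2 years, and nothing else; the function name is hypothetical and no figure for brain capacity is assumed.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Multiplicative growth after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_time_years)


fast = growth_factor(25, 1)  # doubling every year
slow = growth_factor(25, 2)  # doubling every two years

print(f"{fast:,.0f}")  # 33,554,432
print(f"{slow:,.0f}")  # 5,793
```

So over 25 years the same budget would buy somewhere between a few thousand and tens of millions of times more computation, depending on which end of the doubling range holds.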

Silas Barta

Mar 14 2017 at 7:13pm

I actually think you’re spot on about AI risk, but I want to address the (mostly tangential) point about quantum computing. They are *not* general supercomputers, and are only known to be faster on a narrow set of problems that doesn’t generalize to arbitrary computing — unless “factoring integers” turns out to be the bottleneck.

Deutsch’s claims that quantum computers branch into exponentially many parallel universes and store a universe of data compactly are *particularly* suspect; qubits are known not to (recoverably) store more than a bit of information, and the power of a quantum computer depends on the computation paths *not* branching, but interfering with each other.

See quantum computing expert Scott Aaronson’s notes here, in the “Quantum Computing and Many-Worlds” section.

It’s why he puts in the banner of his blog: “Quantum computers would not solve hard search problems instantaneously by simply trying all the possible solutions at once.”

Scott Sumner

Mar 14 2017 at 9:50pm

Silas, I’m not qualified to follow that link, but I do find it interesting that the guy who first published a paper on quantum computing views the many worlds interpretation as the most plausible. I’ve also read Eliezer Yudkowsky’s explanation of why many worlds is the natural interpretation of the theory, and it makes more sense to me than the alternative.

Everyone, lots of interesting points, but nothing to make me change my mind. I’m not at all reassured by the fact that people working in AI are not worried about its risks. That’s what I’d expect, even if there were hidden dangers.

As an aside, I’m more worried about biotech research than AI research.

mbka

Mar 14 2017 at 10:08pm

What I am missing in this discussion is an expression of Darwinian or evolutionary logic. The things that are truly dangerous are not the ones that can do single actions that are bad (save maybe a single and really bad asteroid impact). The danger comes from autonomous function with replication. Only then do you have rapid proliferation without control, and even then there are still checks and balances by competing entities that do the same (as in life in nature).

An AI that needs feeding with electricity and spare parts by friendly humans is not autonomous. An AI that builds other programs, but does not fully repair and replicate itself without external help (electricity, spare parts, resources in short), is not a replicator. Also, what Gordon said: it’s ironic that nearly all discussions on AI involve general-purpose technology celebrities, not actual engineers working on it.

Why is this important? Academics and philosophers always assume that danger MUST come from something that is a) conscious or b) intelligent, or both. Anyone ever had a bacterial infection? Here, the danger came from unintelligent agents, completely dumb and completely unconscious. The danger came from autonomous replicators trying to use resources. You don’t have to be conscious or even have a brain for that. Conversely, a conscious brain that needs constant life support can be unplugged, at least in principle. Imagine the Unabomber in hospital but with access to the internet. That’s AI: potentially dangerous but the means to control it are well known.

So: What really worries me these days is anything biological. Replicating, autonomous agents. Completely orthogonal to intelligence. Examples, CRISPR and gene drive technologies. These act on known autonomous replicators (AKA “life”) that compete for resources with others of the kind (us, our food sources, etc). Imagine a gene drive experiment designed to spread resistance to malaria. Released into the wild it goes wrong. Mosquitoes replicate. They keep infecting humans, rendering them sterile through gene drive. We are VERY close to a “28 days later” type of scenario. This is worrisome, not some silly AI stuff that caters to what academics care for, IQ and consciousness. Me, I care for breathing first, then food, health, then everything else. IQ comes last on my list.

If I am somewhat worried about AI at all, it’s only because I have often been wrong so I have become careful about my own opinions.

mbka

Mar 14 2017 at 10:12pm

Ah Scott, re: biotech, our posts crossed…

Mark Bahner

Mar 14 2017 at 11:07pm

Yes, as I see it, AI that can’t self-replicate is far less dangerous, and AI that can’t move and manipulate things autonomously is far less dangerous.

So I think the real danger only comes when robots can build more robots and can move around and manipulate things. That, to me, is mid-century stuff…which is not so terribly far away. But when it comes, it will be paired with an intelligence that likely will significantly exceed ours, which is what brings the real existential threat.

The reason we rule this planet (sorry bacteria and viruses, it’s true) is because we’re smartest. So pairing bodies approximately as capable as our own with intelligence equal to or superior to our own is a significant existential threat.

Maniel

Mar 14 2017 at 11:51pm

@Mark Bahner

I take your point about advances in computer HW. However, Prof Caplan is more on point than either of us when he says that “AI is no more scary than the human beings behind it.”

ChrisA

Mar 15 2017 at 12:41am

AI is a danger exactly as Scott says, not because of some silly sci-fi movie trope where Skynet suddenly becomes conscious, but because it becomes a very powerful tool when used by someone nefarious. The real scary scenario is uploading: the first person to become a super-intelligent electronic consciousness will want to prevent anyone else from taking that step, if only for self-protection. If she or he doesn’t, sooner or later a psychopath will be uploaded who will use their superpowers against them.

Edan Maor

Mar 15 2017 at 8:34am

@Hazel Meade:

You wrote:

“When many people talk about artificial intelligence, what they really mean is artificial consciousness.”

This statement is pretty much not true of most of the people working on the AI safety problem that I’ve seen. They’ll pretty explicitly say that whether AI becomes conscious, what consciousness even means, is completely a side point to the only thing that matters: will we eventually create some kind of process that can achieve a goal better, more efficiently, etc. than any human. If so, then we need to care *a lot* about which goals we’re giving this optimization process.

The basic argument for worrying about AI safety is incredibly simple, and I haven’t seen anyone refute it even close to convincingly. The only somewhat valid counterarguments I’ve ever seen were variations of “we can control the AI when we build it”. I think that’s wrong, and there’s lots of reasons to think we *won’t* be able to (just for starters, when’s the last time you used a piece of software that didn’t have bugs in it?). Another *potentially* valid argument is that it’s too soon to worry about this problem because AI is so far away, but I think Scott and others are pretty convincing here – we have a pretty terrible track record when it comes to prediction of when a technology is likely.

There’s also the counterargument, invalid in my view, that “AI is only worrying based on what people choose to do with it”. It’s true that I’m worried about what humans choose to do with AI, but this is in my mind just a *different* (somewhat related) problem. It’s like saying “No, the *real* problem is that we’ll create a supervirus”. Neither problem is more real than the other – they’re just 2 problems we have to deal with.

The basic argument to “worry” remains valid. Humans are a kind of intelligence. Assuming there’s nothing magic about us, then at some point, we’ll be able to create an artificial intelligence. If we manage to make it a lot better at achieving its goals than humans are, then its goals will be the only ones that matter/make an impact, in the same sense that humans’ goals have more impact than, e.g., monkeys’.

RPLong

Mar 15 2017 at 10:02am

I think Maniel’s first comment is spot-on. I think that people who fear artificial intelligence are guilty of personifying artificial intelligence. The difference between a self-learning algorithm and a sentient being is that the latter has a will to live. There is no reason at present to believe that artificial intelligence will ever develop hunger pangs or sex drives that drive animal behavior. It would require a motivated human programmer to establish that with programming. You could argue that a self-learning algorithm, given enough time, would eventually stumble upon these things if it determined that they were beneficial to its modus operandi, but isn’t it rather vain to assume that human motivations would be the finish line of that kind of process?

Scott Sumner

Mar 15 2017 at 10:16am

mbka, In my view a “generalist” is likely to look further down the road than a specialist. The specialist focuses on all the things we have trouble doing RIGHT NOW, while the generalist looks at where all this may go in a few hundred years.

Miguel Madeira

Mar 15 2017 at 10:56am

“The difference between a self-learning algorithm and a sentient being is that the latter has a will to live.”

Imagine that, by accident, a self-learning algorithm develops a “will to live” (after all, if it is self-learning, it is natural that it will have a kind of “self-mutation”, I think). In practice, this means a) a tendency to try to block attempts to shut it down; and b) a tendency to make viral copies of itself. And b) especially will have a kind of Darwinian advantage, no? After all, software that makes copies of itself will soon be the most abundant type of software. (I am not so sure about a): especially in its more primitive form, a computer that tries to prevent humans from shutting it down will very probably be shut down BEFORE a computer that does not object to being shut down.)

If this happens, that AI will be very similar to a true sentient being, no?

Mark Nel

Mar 15 2017 at 11:14am

Today, we have a relatively dynamic global economy that looks for signals of consumer wants and needs, and the economy goes about attempting to satisfy those needs. Consumers ultimately rule.

Seems to me, AI would reverse that order. We would become dependent “slaves” to the algorithms, stuck with machine driven solutions. Less choice, less freedom. The thought is scary to me.

Unless of course, someone develops a truly “fickle”, irrational (i.e. human) AI model. Not holding my breath on that one.

RPLong

Mar 15 2017 at 11:14am

Miguel, the way I view your questions is that they rely on ambiguous definitions of words and phrases, and then make use of that ambiguity to lead to a concrete conclusion.

It reminds me of the old free will argument: “Suppose I could build a machine that was exactly like you, programmed it with all your memories and preferences and thoughts, and turned it on. Thus, I have proven that there is no free will.” These arguments aren’t convincing to me because they merely beg the question. It’s like saying, “Suppose I am correct; ergo, I am correct.”

I agree that, subject to a long and contentious list of assumptions that enable AI to be linguistically indistinguishable from biological intelligence, AI will be linguistically indistinguishable from biological intelligence. My point is that just because an algorithm can learn how to write code doesn’t mean that eventually self-learning algorithms will refuse to turn themselves off and attempt to enslave or eradicate mankind.

Mike Sandifer

Mar 15 2017 at 11:28am

Scott,

I wonder if you can articulate your concerns about AI in more specific terms. Are you concerned about potential dangers around AI that can design new AI, augmenting itself or creating totally new AI to reach goals that are increasingly removed from human goals, through a sort of Darwinian process of development?

If so, I don’t share deep concerns about this happening accidentally, because I assume there will be sufficient incentives to make sure that more efficient/advanced algorithms and/or evolutionary processes are in place to successfully monitor and prevent AI evolution from going off the rails.

The real danger may come from those who would corrupt an otherwise harmless AI evolution process, with what would then be the equivalent of computer viruses or other cyber attacks. I must admit, I haven’t spent much time thinking about this, but presumably, at worst, it would be an arms race between legitimate developers and hackers, as it is today.

Rob

Mar 15 2017 at 11:42am

Thomas Sewell,

The Founders tried the white list approach with Article 1, Section 8. You see how well that worked out.

mbka

Mar 15 2017 at 12:05pm

Scott,

I have thought about this when I wrote my comment and I have some sympathy for the line of thought. In general, one should ask the sociologists, politicians, sci fi authors (seriously!), and psychologists, not the engineers. Engineers are too close to the present day technicalities, not the systemic consequences.

But, what I see in these AI conferences is a rather different crowd: technology entrepreneurs or ex-engineers, now “celebrity” technologists (Elon Musk, Bill Gates etc.). And this makes me take the whole discussion with a grain of salt.

Edan Maor,

This goes a lot deeper than AI. It’s a problem with current human-led optimization too. The problem isn’t usually how to achieve a goal. It’s which goal to choose to begin with. In Cambodia under the Khmer Rouge, regional commanders were told to just “get rid” of some excess population that the general leadership deemed the region did not “need”. The subsequent “optimization process” was awful enough w/o any help by AI.

The first mistake in optimization is to assume that only one single-point optimum exists. Usually this is massively untrue for natural systems. The second mistake is to assume that everyone, or at least a majority, agrees on the goal(s) to be optimized. As per Condorcet all the way down to Ken Arrow we know we can’t aggregate preferences. And for sure you can’t get an ought from an is. So, we have all these issues already. AI is just going to be able to reach the wrong answers faster.

Mark Bahner

Mar 15 2017 at 12:21pm

Hi Maniel,

You write:

I think this accurately assesses the state of current AI, but I don’t think it’s necessarily an accurate assessment of the future of AI. I think that as hardware becomes more and more powerful, and software becomes less about hard code, computers will do more and more unexpected things.

For example, when IBM Watson was playing Jeopardy, and asked for a rhyming boxing term for a hit below the belt, it didn’t respond, “What is a low blow?” It responded, “What is a wang bang?” My understanding is that the IBM people have found no instance of a boxing hit below the belt being called a “wang bang.” Watson essentially “made it up.” I think that as hardware becomes more powerful, and software becomes more of the machine-learning type rather than hard coding type, it will be increasingly difficult to attribute machine actions to the humans behind the machines. So I think Prof Caplan’s confidence won’t be valid…particularly in 1-3 decades, when machine intelligence will begin to vastly outstrip human intelligence.

Mm

Mar 15 2017 at 12:36pm

I would think that the people at Vox fear almost any intelligence, especially if it is aligned with experience.

Mark Bahner

Mar 15 2017 at 12:43pm

I think we’re at most a few decades from artificial general intelligence, and then at most a few years to artificial super-intelligence. So I think that anybody who thinks about what “may go on in a few hundred years” is dreaming.

I think even people thinking about what might be going on in 50 years are like people in the year 1800 thinking what might be going on in the year 2000.

Silas Barta

Mar 15 2017 at 1:55pm

@Scott_Sumner: I wasn’t disputing the Many Worlds interpretation (though Aaronson is skeptical); for my part, I actually think it’s the most plausible for much the reasons Yudkowsky gives (“it’s just the Schrödinger equation, assuming it’s valid everywhere”).

I was disputing the claim that quantum computing yields access to exponential storage or computing resources on arbitrary problems via its interaction with parallel universes, as claimed in the excerpt. That doesn’t follow from MWI!

Hazel Meade

Mar 15 2017 at 2:25pm

@Mark Bahner

Suppose IBM Watson were to become conscious.

I will now state with 100% confidence that that will never happen. The technical principles upon which IBM Watson is based are incompatible with consciousness developing.

To get into why would require a rather long-winded explanation of the philosophical and technical problems in “AI” as it exists today. But a loose explanation would be that consciousness is analog and embodied, IBM Watson is neither.

Hazel Meade

Mar 15 2017 at 3:00pm

A couple of other observations.

@RPLong

There is no reason at present to believe that artificial intelligence will ever develop hunger pangs or sex drives that drive animal behavior.

This is spot on. The thing is that an AI has no body and no means of interacting with the world and receiving feedback, and moreover its survival does not depend upon what it does in the world. Its survival depends upon whether human beings pull the electrical plug or not.

People make a lot out of brain emulations, but real brains are designed for and evolved to control a body that does all sorts of things like walking around looking for food. A brain emulation divorced from a body is not going to develop normally. It would be like taking a baby and putting it in an isolation tank and expecting it to grow up like a normal adult human. Plus there’s really a lot of limitations of human brains, so emulating one isn’t even necessarily going to produce a super-intelligence. There’s a difference between being able to arrive at the same biased conclusion faster, and being smarter than a human.

There’s an anecdote about a sea creature called a tunicate, which swims around in the first part of its life, then attaches itself to a coral reef, and then digests its own nervous system. It eats its own brain. Because it doesn’t need it anymore. What do you need a brain for if you don’t need to navigate, right? The whole process of consciousness evolved from its earliest beginnings in the analog world of constant continuous-time interaction and feedback with the environment for the sake of survival. All of our mental processes are dependent on constant streams of sensory data from a body which we have to feed and keep alive to keep those processes going. Moreover from a body which is itself evolved to provide relevant sensory data that pertains specifically to the survival problem: hunger, thirst, sex-drive, pain.

Plus there’s a real question as to whether digital architectures are fundamentally capable of capturing the relevant features of real neurons, which are analog systems whose processes depend on all sorts of things like hormones and local, time-varying electrical fields.

So what does a computer have? It has massive amounts of digital data, exclusively digital processing of that data, a relatively small amount of real-world sensory data, and almost no physical mechanisms with which to affect the real world.

So you see the difference? A modern computer is NOTHING like a human consciousness. It’s so far from being anything like a human consciousness that it’s almost unfathomable.

Joe

Mar 15 2017 at 3:59pm

Scott, my argument would be that ‘risk’ is just the wrong framing. Yes, AI is eventually going to change everything: humans in their current form just aren’t remotely the most powerful mind designs possible, so unless something wipes us out then control will pass to more powerful entities once they can exist. That almost by definition means Artificial Intelligence (plus we can just see lots of specific advantages robots/software will have). So yes, it looks overwhelmingly likely that machines will ultimately take over, and that the most competitive ones will dominate. (See Robin Hanson’s book for one way this may play out in the short term.)

But this is not obviously a terrible outcome. It might be wonderful. The assumption that it’s the former usually seems to be based on the belief that AI will be a single homogeneous Optimising Algorithm that clearly won’t have any of our quirks, heuristics, mind features we care about – won’t ever be happy, sad, playful, thoughtful. But this is not remotely obvious, and if it’s wrong – if in fact AIs will have all of the messiness and complexity of our minds, will be sentient creatures like us – then a universe full of them could represent a wonderful future filled with utility.

Actually the AI-risk folks don’t usually expect future AI to be numerous and competitive like this. They expect there will be a single AI in charge of everything, that will control the universe and fill it with whatever it was programmed to maximise. But this again is far more plausible if AI is simple and homogeneous than if it’s complex and messy: a simple AI could more easily be created in a single short project by a small team, and could much more feasibly ‘bootstrap’ itself by recursively spending all its efforts improving its own mind design. Complex AI would probably be more widely distributed and be developed much more gradually, which would much more likely lead to ‘multipolar’ scenarios where many competing AIs coexist. Again — this outcome might be awful if it amounts to trillions of mindless algorithms consuming everything and then ending up locked in stalemate; or it might be wonderful, if it amounts to a vast civilisation comprised of countless sentient beings.

I agree with you that with the AI question there is a lot of uncertainty, but it doesn’t seem to be unidirectional, like the threat of nuclear war, a possible very bad thing that might or might not happen. It seems more like we don’t just not know what will happen, we don’t know whether each scenario would be extremely good or extremely bad. And so the immediate focus ought to be on finding strong answers to some of the supporting questions that will tell us whether Option A is fantastic and Option B is hellish, or the reverse. Only then should we start picking which option we actually want and trying to push towards it.

bobroberts17e1

Mar 15 2017 at 5:33pm

I want to echo what Hazel Meade commented. It doesn’t matter how nefarious or intelligent an AI is, if it can’t affect the physical world around it, it isn’t going to wreak any havoc.

How do computers obtain access to the physical world? By humans that hook them up in some kind of system. That system may be a car on a road, a robot in a factory or a quadcopter flying overhead.

In each of those scenarios, system safety is a huge consideration. We put guard rails on roads to prevent cars from hurting pedestrians or their passengers. There are concrete poles in front of the Wal-Mart to prevent cars from driving through the entry. We put robot arms in enclosures so they can’t injure factory personnel. We put limit switches to restrict their motion. There are software limits on the flight controllers of quadcopters to prevent them from flying into restricted airspace and we put covers over their blades to prevent them from injuring people if they get too close.

For AI to become dangerous you’re going to need 1) an AI controlling a device or robot that is capable of injuring people, 2) an AI that determines that for whatever reason it wants to injure people and 3) a system so poorly designed that the AI can accomplish this despite safety features designed to keep it from doing so.

Keep in mind that system safety isn’t just physical (keeping a robot arm in an enclosure), it’s also in the software/hardware design. You can design a car to not exceed a maximum speed and separate the portion of the hardware/software that controls speed from the AI so that it can only operate within certain bounds. Even if the AI wants to do something violent, it is restricted by other components from doing so.

I think a more likely possibility is a nefarious human hacking into houses and cars connected to the internet and wreaking havoc than an AI trying to kill a bunch of people. Humans are control freaks. If and when people are smart enough to create AI, then I’m certain those same people will also be smart enough to design it to be controllable and safe within their design parameters.

Mark Bahner

Mar 15 2017 at 5:39pm

@Hazel Meade

I wrote:

You replied:

Perhaps I didn’t word my comments to you very well. You wrote that the first conscious computers would be like “retarded babies.” I think that’s clearly wrong. A baby is born ignorant. It doesn’t know English. It’s never read a book. It certainly has never read every single article in Wikipedia. But any conscious computer in the future is going to have complete command of the English language and will be able to instantaneously access the Internet to download and read anything that’s on the Internet.

So my statement regarding Watson was simply meant to show that no computer that becomes conscious would be ignorant like a baby is ignorant. In fact, a computer that becomes conscious would likely have more knowledge than any human on earth. (If a computer can become conscious. I don’t really have an opinion whether one can.)

Mark Bahner

Mar 15 2017 at 5:54pm

You’re looking at much too narrow a definition of AI. Baxter the industrial robot is AI. It has a body. It interacts with the world and receives feedback.

And Tesla Autopilot is AI. It interacts with the world. It receives feedback. And its survival can indeed depend on what it does in the world. When a Tesla on Autopilot crashed into a tractor trailer in Florida (as its driver was allegedly watching a Harry Potter movie rather than the road) the Tesla Autopilot did not “survive” the crash.

Scott Sumner

Mar 16 2017 at 9:34am

Mike, I don’t have any specific fear, because I don’t know enough about the technology. Rather it’s a general fear of new and potentially very powerful technologies. Humans are incredibly lucky that nukes are difficult to make. We may not be so lucky with the next technology.

And as I said above, I’m more worried about biotech than AI. (Partly because I know more about biotech.)

Silas, I defer to your greater expertise on quantum computing.

Joe, I’m not afraid of AI “taking over”.

bobroberts, You said:

“For AI to become a dangerous you’re going to need 1) an AI controlling a device or robot that is capable of injuring people, 2) an AI that determines that for whatever reason it wants to injure people and 3) a system so poorly designed that the AI can accomplish this despite safety features designed to keep it from doing so.”

You are one of a number of commenters who seem to have read only the title of my post. I recommend that you read the post itself. AI could be programmed to hurt people; it doesn’t need to decide that itself. My post is not about the sci-fi scenario where AI goes rogue.

Hazel Meade

Mar 16 2017 at 12:28pm

@Mark Bahner,

I think you’re missing my point. I don’t think it’s possible for anything resembling a modern computer to become conscious. The only conscious entities we know of are animals, mostly mammals. They all have brains which develop from childhood in a stimulative sensory environment beginning at a pre-natal pre-conscious stage. The closest we can get is with a brain emulation, but real brains don’t just pop out of the womb fully developed. In order to produce consciousness the brain emulation would have to be allowed to developmentally unfold in the same way as a human brain – it would have to be trained as a baby brain and allowed to develop like a baby’s brain in constant sensory feedback with the world. Thus the first conscious computer is likely to be some sort of brain emulation which will be like a human baby’s brain. It probably will also be dysfunctional because we’re probably not going to capture everything important in the brain emulation on the first try.

Todd Kreider

Mar 16 2017 at 5:28pm

“Stephen Hawking, Elon Musk, Bill Gates, Nick Bostrom and all the other experts…”

As I expected with 45 comments, at least a couple pointed out these are not A.I. experts, and I’d say it stronger by saying they are not remotely A.I. experts.

Nick Bostrom is a philosopher and popularizer of A.I.-related ideas that have been batted around for many decades.

Joe W

Mar 16 2017 at 6:34pm

Scott, if your reason for starting to worry about AI is that Hawking, Musk, Gates, and Bostrom have voiced concerns about it, you should know that the ‘AI takes over’ scenario is indeed what they are afraid of.

Hawking: “It would take off on its own, and re-design itself at an ever increasing rate … Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

Musk: “Imagine that you were very confident that we were going to be visited by super intelligent aliens in let’s say ten years or 20 years at the most … Digital super-intelligence will be like an alien … Deep artificial intelligence — or what is sometimes called artificial general intelligence, where you have A.I. that is much smarter than the smartest human on Earth — I think that is a dangerous situation.”

Gates: “First the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”

From the Wikipedia page on Bostrom’s book Superintelligence: “Superintelligence: Paths, Dangers, Strategies is a 2014 book by the Swedish philosopher Nick Bostrom from the University of Oxford. It argues that if machine brains surpass human brains in general intelligence, then this new superintelligence could replace humans as the dominant lifeform on Earth.”

If you think this idea is nuts and not even worth consideration, then you shouldn’t put any weight into these peoples’ concerns.

Mark Bahner

Mar 16 2017 at 7:19pm

If you think the idea is nuts and not even worth consideration, you should do a little more reading. And then do a lot more reading.

The fundamental unquestionable truth behind artificial intelligence is that anything that increases exponentially with a doubling time on the order of a year or two eventually produces world-changing events. The total number of calculations per second produced by all the world’s microprocessors is such a phenomenon. The total number of calculations per second produced by all the world’s microprocessors is increasing by 86 percent per year. In other words, doubling in just a little over 1 year.

So…if the total number of calculations per second added by all the microprocessors in the world was equal to only one human brain in 1993, and the calculations per second are increasing by 86 percent per year, when will the calculations per second of all microprocessors equal one trillion human beings?

Show your work. 🙂

Todd Kreider

Mar 16 2017 at 11:04pm

I sure don’t think the concern over A.I. is nuts but nor do I think it is a near inevitable calamity.

I have disagreed with part of Mark’s idea above since the 80s: Just because computer power is incredible by 2035 or 2050 compared with today, which I expect it to be, doesn’t mean strong A.I. is a necessary outcome. But the chance that incredible computer power doesn’t significantly change the world in several ways seems remote.

Mark Bahner

Mar 16 2017 at 11:13pm

Elon Musk runs a company that has a fleet of cars with Autopilot that drive 1 million miles every 10 hours…880 million miles per year. He has a front-row seat to how rapidly machines can improve when they’re collecting and analyzing such massive amounts of data.

Mark Bahner

Mar 18 2017 at 10:46am

C’mon, people! Try to get into the spirit of this! 🙂

If something increases by 86 percent per year, it doubles every 14 months. One trillion is approximately 2^40 (two raised to the fortieth power…or 40 doublings). 40 x 14 months = 560 months = about 47 years. So if all computers in the world in 1993 performed the same number of calculations per second as one human brain, the year when computers would perform the same number of calculations per second as 1 trillion human beings would be 1993 + 47 = 2040…23 years from now.

P.S. And it hits 1 quadrillion human brain equivalents only 140 months later…in 2052, 35 years from now.
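
For anyone who wants to check the arithmetic above, here is a minimal sketch in Python. It takes the comment's premises as given (86 percent annual growth and a 1993 baseline of one human-brain equivalent); neither figure is verified here, and the function names are made up for illustration. Using exact logarithms rather than a rounded 14-month doubling time, the doubling time comes out at roughly 13.4 months, so the crossover years land a couple of years earlier than the rounded figures.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a constant annual growth rate (0.86 = 86%)."""
    return math.log(2) / math.log(1 + annual_growth)


def years_to_multiply(factor: float, annual_growth: float) -> float:
    """Years needed to grow by `factor` at a constant annual growth rate."""
    return math.log(factor) / math.log(1 + annual_growth)


# Premises taken from the comment, not independently verified.
GROWTH = 0.86      # 86 percent per year
BASE_YEAR = 1993   # year assumed to equal one human-brain equivalent

doubling_months = 12 * doubling_time_years(GROWTH)
print(f"Doubling time: {doubling_months:.1f} months")

for label, factor in [("one trillion", 1e12), ("one quadrillion", 1e15)]:
    years = years_to_multiply(factor, GROWTH)
    print(f"{label} brain equivalents: {years:.1f} years after {BASE_YEAR}, "
          f"around {BASE_YEAR + years:.0f}")
```

The conclusion is sensitive to both premises: a lower growth rate or a later baseline year pushes the dates out considerably.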

Roger McKinney

Mar 18 2017 at 7:18pm

indicate why your argument is not convincing to people much better informed than me.

I used to work as the product expert for the best neural networks program in the world, made by StatSoft of Tulsa, OK. NN is the heart of AI. Believe me there is nothing to be afraid of.

AI has always been overhyped. EconTalk had a good episode on it last year that debunked the fear. Why do “experts” have so much fear? It could be irrational or simply promotional. A whole lot of it is their socialist bent. Just as with global climate change, socialist experts are always looking for ways to frighten people into having the state take control of everything.

Hayek had the best argument against fear of AI. He wrote that humans will never understand the brain because for one entity to understand another the understanding entity must be more complex. We cannot become more complex than ourselves. So it makes sense that humans cannot design an entity that is more complex than us.

Todd Kreider

Mar 19 2017 at 11:36am

Roger McKinney wrote:

This is what the guest of that episode, physicist Richard Jones said at the end about A.I.:

Here is also one particularly surprising quote from the physicist, who was on that podcast:

Moore’s Law is not a social construct and is coming to an end by around 2022, but there is no sign of an end (yet?) to the general acceleration in computer power that, as Kurzweil points out, began decades before Moore’s Law with switches and vacuum tubes.

With respect to creating a complex system, I’d say that humans have developed a world economy that is more complex than the human brain, although I can’t prove it.

Mark Bahner wrote:

Right, if the trend holds, which we don’t know out to 2052 or 2042 or even to 2032, but I have thought at least to 2030.

Mark Bahner

Mar 19 2017 at 10:03pm

It’s very hard for me to believe that an economist who was born in 1899 and died almost exactly 25 years ago could have some fundamental insight on AI. In 1992, I was 2 years away from buying a laptop computer with a 400 meg hard drive (or was it a 120 meg hard drive?) for $2000.

This seems more of a religious argument than an argument from science. And I don’t even see it as relevant. There is no need to understand or duplicate a human brain in order to design something vastly superior to it. For example, IBM Watson reads 800 million pages per second. In contrast, the human record is 25,000 words per minute.

Mark Bahner

Mar 21 2017 at 12:19pm

I wrote:

Todd Kreider responds:

OK, let’s say the current trend of growing 86% per year only holds until 2030. Let’s also say the human brain is 20 petaflops, which is Ray Kurzweil’s estimate, and is generally in the middle of a range of estimates that vary by maybe a factor of 100 above and below his estimate. If the human brain is 20 petaflops, we’d have ~10 billion human brains worth of calculations per second in 2030.

What would happen to the trend of calculations per second of world microprocessors? To me, the most likely possibility would be that the trend would be maintained or even increase in speed (i.e., more than 86% per year) because eventually microprocessors could play a bigger and bigger role in the design of improved microprocessors. For example, right now, there are ~7.5 billion humans, and the computer calculations per second add up to only about 70 million more people. But if in 2030 there are about 8 billion humans, and the computer calculations add 10 billion more equivalent human brains, a lot more human time and computer power can be directed towards increasing the number of calculations per second performed by computers. So it’s like a positive feedback loop (as opposed to a negative feedback loop).

Gilbert

Mar 24 2017 at 5:39am

There is nothing to be worried about.

First, we humans produce children. This is how we keep our species going, generation after generation.

Giving birth to an AI is the same as giving birth to a child. You love it, raise it and transmit values you think are valuable to keep in next generations.

Doing a next generation with “meat” and reproduction, and doing it thru technology is the same: creating a following generation.

I do believe that biologic life is a step in evolution, and just a step. Every life in the Universe that starts as a biological entity, if it develops intelligence and technology, will reach a level (like we are close to) where you can produce a “next generation” not made from biology/meat/reproduction but technology and artificial intelligence (it’s not artificial but non-biology based rather).

My belief is that any civilization advanced enough will move from the biologic step to the machine step because it gives you immortality and allows you to travel in the Universe. Once immortal, it does not matter anymore how long it takes to reach other stars or other systems: all that matters to you is energy.

Technology can be so advanced that those “intelligent machines” are as advanced if not more as our current meat bodies (which are biological machines anyway). They could even be able to move at leisure between meat bodies and machine bodies, and use the machine bodies to move around in the Universe, and move back to meat at destination if it fits their needs or wishes.

We will create an AI. Like we create children thru reproduction because our lives are limited in time. This AI will be able to produce following generations faster than we do because it won’t need 15 or 10 years to become a working adult like our children. Its intelligence will grow exponentially, reach our level, and go beyond it.

This AI will make scientific discoveries and give us the secrets of the universe, and space travel. Advanced enough, this AI will let us leave the biological bodies for advanced machine ones so we can travel the stars and the universe. You will be able to “exist” in copies of yourself, so you can have 10 copies of you going in 10 different directions and merge back when they meet again and share knowledge, experience.

Biology and meat bodies are just a step for civilizations. If c is the absolute speed limit, then immortality through technology is the key to the Universe itself and exponential intelligence growth.

It’s not different than having children. When you reproduce you create another generation made from meat and transmit genes and knowledge. AI and technology will not use meat, but it’s the same process.

You need to go higher and see the big picture itself. This is the future. If we meet another civilization, it will be intelligent machines. So advanced they might have kept the “look” of their previous biological bodies, but immortal so they can travel the stars and the Universe itself.

Mel Pama

Mar 24 2017 at 3:37pm

Remember “V’Ger” in the first Star Trek movie. It stood for “Voyager”, one of our satellites sent into space that returns looking for its creator. Well, it is not too far away that that would be possible. So all you carbon infestations of the planet must beware of the threat to all of us. The road of good intent is littered with the bodies of the dead.