Economic models of cooperation and conflict are often based on the Prisoner’s Dilemma (PD) of game theory. Simple as this model is, it helps us understand whether or not a war will be fought, where “fought” includes steps of escalation through retaliation, as in the current situation between the government of Israel and the government of Iran.

Assume two countries, each governed by its respective ruler, S and R. (In the simplest model, it may not matter who the ruler is or whether it is an individual or a group.) Each ruler faces the alternative of fighting the other or not. By definition of a PD, each ruler prefers no war, that is, no mutual fighting, to war; let’s give each ruler a utility index of 2 for the situation where both fight and 3 for the situation where neither fights. A higher utility number represents a more preferred situation (a situation with higher “utility”). Each ruler, however, would prefer even more to fight if he is the only one to do so and the other chickens out; this means a utility number of 4 for that situation, the top preferred option for each of them. The worst alternative from each player’s perspective is to be the “sucker,” the pacifist who ends up being defeated; the utility index is thus 1 for the non-fighter in this situation.

No cardinal significance should be attached to these utility numbers: they only represent the rankings of different situations. Rank 4 only means the most preferred situation and 1 the least preferred, with 3 and 2 in between. A more preferred situation may simply be a less miserable one, with a smaller net loss.

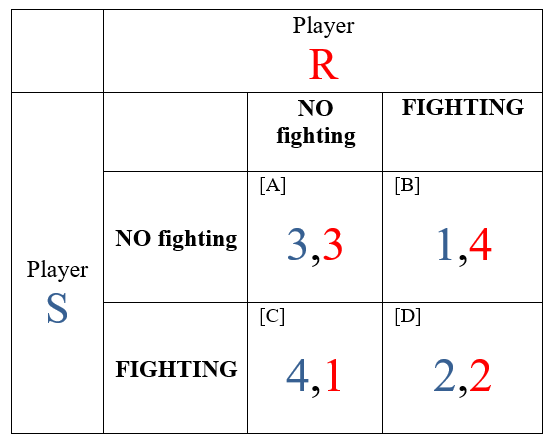

This setup is represented by the PD payoff matrix in the chart. For our two players, there are four possible combinations, or situations, of “FIGHTING” or “NO fighting”; each cell, marked A to D, represents one of these combinations. The “payoffs” could be sums of money; here, they are our utility rankings, which we assume to be the same for the two players. The first number in a cell gives the rank of that situation for S (the row player, blue in my chart) given the corresponding (column) choice by R. The second number in the cell gives the rank of that situation for R (the column player, red in my chart) given the corresponding (row) choice of S. For example, Cell B tells us that if S does not fight but R does, the latter gets his most preferred situation while S is the sucker and gets his worst possible result (being defeated or severely handicapped). In Cell C, S and R switch places as the sucker (R) and the most satisfied (S). The player who exploits the sucker is called a “free rider”: the belligerent gets a free ride at the expense of the pacifist. Both S and R would prefer to land in Cell A rather than in Cell D, but the logic of a PD pushes them into the latter.

The reason is easy to see. Consider S’s choices. If R decides to fight, S should do the same (Cell D), lest he be the sucker and get a utility of 1 instead of 2. But if R decides not to fight, S should fight anyway because he would then get a utility of 4 instead of 3. Whatever R does, it is in the interest of S to fight; fighting is his “dominant strategy.” R reasons the same way. So both will fight, and the system will end up in Cell D. (I provide a short complementary explanation of the PD in my review of Anthony de Jasay’s Social Contract, Free Ride in the Spring issue of Regulation.)
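The dominance argument can be checked mechanically. Below is a minimal Python sketch, assuming the cell layout described above (S chooses the row, R the column; A = neither fights, B = only R fights, C = only S fights, D = both fight). The names `PD`, `rank`, `dominant_strategy`, and `transform` are illustrative only.

```python
# Ranks from the chart: PD[(S's move, R's move)] = (rank for S, rank for R).
# "N" = no fight, "F" = fight; 4 is the most preferred situation, 1 the least.
PD = {
    ("N", "N"): (3, 3),  # Cell A: no war
    ("N", "F"): (1, 4),  # Cell B: S is the sucker, R the free rider
    ("F", "N"): (4, 1),  # Cell C: R is the sucker, S the free rider
    ("F", "F"): (2, 2),  # Cell D: war
}
MOVES = ("N", "F")


def rank(game, player, own, other):
    """Rank obtained by `player` (0 = S, 1 = R) playing `own` against `other`."""
    cell = (own, other) if player == 0 else (other, own)
    return game[cell][player]


def dominant_strategy(game, player):
    """Return the move that is strictly better whatever the other does, else None."""
    for own in MOVES:
        alt = "F" if own == "N" else "N"
        if all(rank(game, player, own, other) > rank(game, player, alt, other)
               for other in MOVES):
            return own
    return None


def transform(game, f):
    """Apply the same strictly increasing function f to every rank."""
    return {cell: (f(a), f(b)) for cell, (a, b) in game.items()}


print(dominant_strategy(PD, 0), dominant_strategy(PD, 1))  # F F
# Only rankings matter: cubing every rank leaves the dominant strategy unchanged.
print(dominant_strategy(transform(PD, lambda u: u ** 3), 0))  # F
```

Both rulers’ dominant strategy is to fight, which lands them in Cell D even though each ranks Cell A higher. The last line illustrates the point about ordinal utility: any strictly increasing relabeling of the ranks (here, cubing them) leaves the conclusion unchanged.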

This simple model explains many real-world events. Once a ruler views his interaction with another as a PD game, he has an incentive to fight (attack or retaliate). The ruled do not necessarily all share the ruler’s interest, but nationalist propaganda may lead them to believe that they do. One way to prevent war is to change some payoffs in the rulers’ matrix so as to alter their incentives. Suppose, for example, that S or R realizes that war would be too costly, given the wealth he may lose or the other’s military capabilities (for instance, if war threatens his own power). The preference indices in the matrix will then change; try 4,4 in Cell A and 3,3 in Cell D, with 2,1 and 1,2 on the other diagonal. The new incentives will have eliminated the PD nature of the game.
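The effect of the new ranks can be checked by listing the pure-strategy Nash equilibria, the cells in which neither ruler could do better by changing only his own move. This Python sketch assumes that Cell B gets 2,1 and Cell C gets 1,2 (the text leaves the assignment open; the reverse gives the same equilibria); the function names are illustrative.

```python
from itertools import product

MOVES = ("N", "F")  # N = no fight, F = fight

# game[(S's move, R's move)] = (rank for S, rank for R); 4 = most preferred.
PD = {("N", "N"): (3, 3), ("N", "F"): (1, 4),
      ("F", "N"): (4, 1), ("F", "F"): (2, 2)}

# Ranks suggested in the text. Putting (2, 1) in Cell B and (1, 2) in Cell C is an
# assumption; swapping them yields the same equilibria.
MODIFIED = {("N", "N"): (4, 4), ("N", "F"): (2, 1),
            ("F", "N"): (1, 2), ("F", "F"): (3, 3)}


def best_responses(game, player, other):
    """Moves maximizing the rank of `player` (0 = S, 1 = R) given the other's move."""
    def rank(own):
        cell = (own, other) if player == 0 else (other, own)
        return game[cell][player]
    best = max(rank(m) for m in MOVES)
    return {m for m in MOVES if rank(m) == best}


def pure_nash_equilibria(game):
    """Cells in which each ruler's move is a best response to the other's move."""
    return [(s, r) for s, r in product(MOVES, MOVES)
            if s in best_responses(game, 0, r) and r in best_responses(game, 1, s)]


print(pure_nash_equilibria(PD))        # [('F', 'F')], Cell D only
print(pure_nash_equilibria(MODIFIED))  # [('N', 'N'), ('F', 'F')], Cells A and D
# With the new ranks, S's best move depends on R's: no dominant strategy.
print(best_responses(MODIFIED, 0, "N"), best_responses(MODIFIED, 0, "F"))  # {'N'} {'F'}
```

Under these assumed ranks, the game is no longer a PD: neither ruler has a dominant strategy, and Cell A becomes a stable outcome. Cell D remains one too, however, since a ruler who expects the other to fight still prefers to fight; in this version of the model, the change makes peace possible rather than guaranteed.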

Another way to stop the automatic drift into Cell D is for the two players to realize that, instead of playing a one-shot game, they are engaged in repeated interactions in which cooperation, notably through trade, will make Cell A more profitable than a free ride over several rounds. However, this path is likely to be inaccessible if S or R is an autocratic ruler, who does not personally benefit from trade and individual liberty as much as ordinary people do. The possibility of transforming a conflictual PD game into a repeated cooperative game was brilliantly explained by political scientist Robert Axelrod in his book The Evolution of Cooperation (Basic Books, 1984).
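To illustrate the repeated-game argument, here is a minimal Python sketch that pits tit for tat (do not fight first, then copy the other ruler’s last move), the strategy that did best in Axelrod’s computer tournaments, against a ruler who always fights. It assumes, purely for illustration and despite the ordinal caveat above, that the chart’s ranks can be added across rounds as if they were cardinal payoffs; the function and strategy names are hypothetical.

```python
# Per-round payoff to a ruler, keyed by (own move, other's move); N = no fight, F = fight.
# ASSUMPTION: the chart's ranks are summed across rounds as if they were cardinal.
STAGE = {("N", "N"): 3, ("N", "F"): 1, ("F", "N"): 4, ("F", "F"): 2}


def tit_for_tat(other_history):
    """Do not fight in the first round; then copy the other ruler's previous move."""
    return other_history[-1] if other_history else "N"


def always_fight(other_history):
    """Fight every round, whatever the other ruler has done."""
    return "F"


def play(strategy_s, strategy_r, rounds):
    """Return the total payoffs of S and R over `rounds` repetitions of the PD."""
    moves_s, moves_r = [], []
    total_s = total_r = 0
    for _ in range(rounds):
        # Both rulers choose simultaneously, seeing only past moves.
        s, r = strategy_s(moves_r), strategy_r(moves_s)
        total_s += STAGE[(s, r)]
        total_r += STAGE[(r, s)]
        moves_s.append(s)
        moves_r.append(r)
    return total_s, total_r


for n in (1, 10):
    print(n, play(tit_for_tat, tit_for_tat, n), play(always_fight, tit_for_tat, n))
# 1 (3, 3) (4, 1)
# 10 (30, 30) (22, 19)
```

In a single round, the free rider does better (4 against 3). Over ten rounds, a ruler who cooperates with a tit-for-tat partner collects 30, while one who free rides in the first round and then faces retaliation collects 22. With these numbers, the free ride stops paying as soon as there are more than two rounds (3n against 2n + 2). The sketch compares only two strategies and is not a full equilibrium analysis of the repeated game.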

Like all models, this one hides some complexities of the world. It does not explicitly incorporate deterrence, which is essential for preventing war as soon as one of the players views the game as a PD. But when deterrence has failed and one player has attacked, the question is whether a counter-attack, and of which sort, will have a better deterrent effect or will just be another step in mutual retaliation, that is, open war.

In the current Middle East situation, religion on the Iranian rulers’ side makes matters worse by overriding rational considerations of military potential. Preferences are thus likely to differ from those of a repeated PD game. “When you shot arrows at the enemies, you did not shoot; rather God did,” goes a saying among Iranian radical zealots (quoted in “Iranians Fear Their Brittle Regime Will Drag Them Into War,” The Economist, April 15, 2024). You cannot (always) lose with God on your side.

God or Allah shooting the arrow, by Pierre Lemieux and DALL-E

READER COMMENTS

Ahmed Fares

Apr 17 2024 at 4:55pm

re: “Seem to slay…”

Before the Battle of Badr, in which the Muslims were victorious, the Prophet Muhammad threw a handful of stones at the enemy. I mention this to help explain the following Qur’anic verse, revealed after the battle, which establishes that Muslims believe that only God causes death:

And you did not kill them, but it was Allah who killed them. And you threw not, [O Muhammad], when you threw, but it was Allah who threw that He might test the believers with a good test. Indeed, Allah is Hearing and Knowing. —Qur’an 8:17

So we have that belief of 1.8 billion Muslims. Now let’s do Hinduism, which adds another 1.2 billion people, give or take.

The Bhagavad Gita is a story about Arjuna, a warrior who is depressed about the thought of causing death. Lord Krishna incarnates in the form of a man and explains the reality of things.

“I am come as Time, the waster of the peoples,

Ready for that hour that ripens to their ruin.

All these hosts must die; strike, stay your hand—no matter.

Therefore, strike. Win kingdom, wealth, and glory.

Arjuna, arise, O ambidextrous bowman.

Seem to slay. By me these men are slain already.

You but smite the dead.”

So according to Hinduism, the 3,000 people who died on 9/11 were already dead when the planes hit the buildings.

Pierre Lemieux

Apr 17 2024 at 6:09pm

Thanks, Ahmed, for these scholarly quotes. I know that they are related to deep theological questions, but should we suppose that the Iranian government’s missiles were already built before the Greeks asked rational questions about the world, before Western science was born, and before game theory was invented?

Atanu Dey

Apr 18 2024 at 4:55am

Mr Fares: Do you have a source for the quoted bit from the Bhagavad Gita? Reason I ask is that the translation appears to be rather sloppy.

Also, you write, “So according to Hinduism, the 3,000 people who died on 9/11 were already dead when the planes hit the buildings.”

No, that’s not in accord with Hinduism. This is not an appropriate forum for discussing that matter.

Ahmed Fares

Apr 18 2024 at 2:51pm

Here’s another translation with verse number so you can check for yourself:

Therefore, arise and attain honor! Conquer your foes and enjoy prosperous rulership. These warriors stand already slain by Me, and you will only be an instrument of My work, O expert archer. —Bhagavad Gita: Chapter 11, Verse 33


This website has five different translations:

English Translation By Swami Adidevananda

11.33 Therefore arise, win glory. Conquering your foes, enjoy a prosperous kingdom. By Me they have been slain already. You be merely an instrument, O Arjuna, you great bowman!

English Translation By Swami Gambirananda

11.33 Therefore you rise up, (and) gain fame; and defeating the enemies, enjoy a prosperous kingdom. These have been killed verily by Me even earlier; be you merely an instrument, O Savyasacin (Arjuna).

English Translation By Dr. S. Sankaranarayan

11.33. Therefore, stand up, win glory, and vanquishing foes, enjoy the rich kingdom; these [foes] have already been killed by Myself; [hence] be a mere token cause [in their destruction], O ambidextrous archer!

English Translation of Abhinavgupta’s Sanskrit Commentary By Dr. S. Sankaranarayan

11.33 See comment under 11.34.

English Translation of Ramanuja’s Sanskrit Commentary By Swami Adidevananda

11.33 Therefore, arise for fighting against them. Conquering your enemies, win glory and enjoy a prosperous and righteous kingdom. All those who have sinned have been already annihilated by Me. Be you merely an instrument (Nimitta) of Mine in destroying them – just like a weapon in my hand, O Savyasacin! The root ‘Sac’ means ‘fastening’. A ‘savyasacin’ is one who is capable of fixing or fastening the arrow even with his left hand. The meaning is that he is so dexterous that he can fight with a bow in each hand.


That’s why I didn’t respond. Pierre brings religion into the discussion and I respond in a limited manner because I know this is an economics forum.

David Seltzer

Apr 17 2024 at 6:30pm

Regarding cell D,D: Iranian Supreme Leader Khamenei threatened an attack on Israel but signaled it would be limited. Israel’s response was defensive, and it ended with little damage. The tacit rules of engagement, I suspect, were to limit this to a bar fight in which casualties and costs were minimized. After the attack, stern warnings were issued by Khamenei and Netanyahu. The range of conflict in cell D,D extends from a skirmish to a nuclear launch. Maybe utility in D,D ranges between 2,2 and 3,3.

Pierre Lemieux

Apr 17 2024 at 7:22pm

David: Remember that 1st, 2nd, 3rd, and 4th are just rankings of preferences, so (1) fractions don’t change the rankings, and (2) if the rankings change in one cell, they have to change in at least one other cell. Still, I see your point. From the point of view of the player who has been attacked, the problem is one of deterrence: Would a non-response increase the probability of future attacks (a future war) even if it reduces the probability of an immediate war, and which would be worse? (I am just pointing out how to set up the problem once future rounds and deterrence are explicitly introduced in the game.)

David Seltzer

Apr 17 2024 at 8:00pm

Thank you, Pierre. I should have considered future events more explicitly. Probabilities are not static. Therein lies the problem, as they change at the margin with each actor’s change in behavior. Guessing an opponent’s actions in war is a game played with imperfect information. One method is Monte Carlo simulation, used to develop strategies for each possibility.

Craig

Apr 18 2024 at 8:46am

“Guessing an opponent’s actions in war is a game played with imperfect information”

Indeed, Mr. Seltzer, and I’d suggest it is a game in which the benefits are chronically overestimated and the costs grossly underestimated.

Jim Glass

Apr 17 2024 at 9:27pm

The National World War I Museum held a session about the fact that ending wars is much more difficult than starting them, yet much less studied. As to “Why didn’t peace break out in 1915?” (when it was obvious to all that the war was going to be *hugely* more costly than anyone had believed, a *massive* lose-lose for all, and thus irrational to continue), the speaker gives an interesting ‘game theory’ take. Each year he holds a real-money auction with his students in which he pays $1 to the high bidder, and he regularly collects as much as $65 for it. Incentives!

There are also interesting observations about the incentives and behaviors that drove the great expansion and intensification of the war. Remember, at the start it was all about next to nothing.

In this process, the nature of political regimes matters — a lot. A lesson for right now.

Craig

Apr 17 2024 at 9:36pm

I’d also note that the sunk cost fallacy often comes into play, i.e., we spent so much and lost so many that surely we have to achieve the outcome we want; we can’t let those men have died in vain.

Jose Pablo

Apr 17 2024 at 10:10pm

I understand it is a very simplified model. But even for a simplified model, I find a couple of things striking:

* All the cells except [D] are “end games.” [D] is “transitory”: the end game of [D] is either R losing the fight and S winning [DS], or S losing and R winning [DR].

For the ruler of R, [DS] is a worse outcome than not fighting. In [DS], R is worse off than a sucker; it is a “devastated sucker.” Rulers should be aware (if they are capable of reading history; some of them are not) of how prone they are to overestimate the capabilities of their armies (think of Vietnam or Afghanistan) and so avoid [D] at any cost.

* I don’t see how the military occupation of a foreign country can have a utility of 4. I don’t think the English would have agreed when they occupied Ireland. Even the Israelis abandoned the occupation of Gaza because it was a nightmare (it didn’t seem like a 4 back then).

Roger McKinney

Apr 18 2024 at 11:29am

Great points! Most countries enter war much too optimistic about success. Iran wants to wait until it has nuclear weapons to counter Israel’s. Also, it knows it has an immediate advantage in numbers of missiles. Its economy is weaker, so without nuclear weapons it must quickly overwhelm Israel’s defenses.

Israel knows it can’t wait for Iran to go nuclear, so as in 73, it must make a preemptive strike.

Who knows which side will win, but Israel’s defeat means the end of Israel as a nation. Iran’s defeat means only hardship for a while because Israel can’t occupy Iran.

Jose Pablo

Apr 18 2024 at 6:33pm

Defeated countries haven’t been occupied, at least not in the last 150 years. As far as I can recall off the top of my head, the American occupation of the Mexican lands of Texas and California, and the Chilean occupation of a (limited) part of Bolivia and Peru, were the last successful occupations of enemy territory.

The US doesn’t rule Iraq (much less Afghanistan), the Russians don’t rule Afghanistan, and Germany is still ruled by the Germans and Japan by the Japanese. The Italians always ruled Italy. Gaza, which has been defeated numerous times since 1948, wasn’t ruled by the Israelis before October 7th.

The “invading” armies are a myth of pre-Napoleonic times, when wars were waged by a class of warriors very limited in number that tended to remain in the invaded territories in place of the old caste. In any case, the new masters still needed the “invaded” serfs alive and well to extract rents from them (it is very difficult to extract rents from a dead farmer).

Occupying defeated enemy territory is just exhausting. It is not going to happen. Nowadays, when a ruler is defeated by a foreign army, the only thing that happens is that a new domestic ruler, sympathetic to the cause of the invaders, is put in place of the old one.

The CIA in the 50s-70s mastered how to do precisely that without a war: Iran (1953), Guatemala (1954), Chile (1971). The best the Israelis can do is learn from that.

Mactoul

Apr 19 2024 at 9:13pm

Plenty of occupations you aren’t recalling: Tibet by Communist China, East Prussia by Russia. Central Asia is still quite Russified, considering that it became Russian only in the mid-19th century.

All nations possess their territories by brute might or, in other words, by their ongoing occupation.

Pierre Lemieux

Apr 18 2024 at 11:41am

Jose: As you said, it is a very simplified model. A few points seem to me of special importance. It is a PD until you change the payoffs; then it may differ from a PD. (That the free rider’s payoff is higher than the cooperation payoff is of the essence of a PD.) Moreover, my application is only about fighting or not fighting. Once the players land in D (or in B or C), another “game within the game” can start, with its own matrix. Many rulers in history have believed, and many still believe, that winning a war is valuable (whether for looting, glory, or some other pleasures of ruling). Whether it is the same for the typical ordinary citizen is another matter: Adam Smith thought that even the American colonies entailed net costs. As Jim Glass mentioned, the nature of the political regime matters for the payoffs and the nature of the game. Last but not least, the problem of aggregation remains: What is the “public interest” or, for a group of rulers, their common interest, often called the “national interest”?

Jose Pablo

Apr 18 2024 at 8:11pm

Many rulers in history have believed, and many still believe, that winning a war is valuable

Yes, yes but the problem is that they don’t decide on “winning a war“, they decide “to fight a war” which can have a very different outcome.

Given the historical precedents, well-read rulers should know that their chances of overestimating the possibilities of winning a war they start are close to 1 (Putin as the last example). In this case, avoiding starting a war is the most sensible strategy. The risk-reward ratio is just terrible. Even for the ruler.

Pierre Lemieux

Apr 18 2024 at 9:37pm

Jose: This suggests that having many rulers, in a context of free speech and debate and with a procedure for peacefully changing them, is much more efficient than being subject to only one.

Jose Pablo

Apr 18 2024 at 10:52pm

Yes, Pierre, no doubt …

and yet, wait and see the level of the ideas discussed in the next debate between the two wannabe American rulers. I still remember the last one …

Not to mention the “new standards” that have been established for “a procedure for peacefully changing the rulers” … what can I say?

Time to question if we really need rulers, debating and peacefully changing (no doubt the best kind) or otherwise.

Mactoul

Apr 17 2024 at 10:15pm

1) People say and quote these teachings, but by their actions they show that they don’t actually believe these precepts.

Gita teaches not to feel sorrow at death but religious Hindus mourn as anybody else.

2) These teachings may actually be erroneous and the products of an inadequate concept of God.

Ahmed Fares

Apr 17 2024 at 10:19pm

There has been a lot of debate about whether the attack by Iran was a success or a failure. All the military experts I follow, most of whom are American, so I don’t think there is a bias there, say that this was a huge success for Iran. They say that the Shahed-136 drones shot down in large numbers were like pawns in a chess game, meant to be sacrificed to use up defensive missiles, in the same way Russia used them in Ukraine. Scott Ritter weighs in:

From another post by Scott Ritter:

Larry Johnson, a former CIA analyst, posted this:

Israel’s Air Defense Fails to Stop One Iranian Missile

Grand Rapids Mike

Apr 18 2024 at 1:00pm

Interesting comment; that is not how it is being played by the press. One thought after reading your comment is that most of the missiles shot off by Iran were then decoys to use up the counter-defense, so the real target could be hit.

Also, since Israel knows this, they know the attack was not a win for Israel, as the press is spinning it. So if Israel knows it’s not a win and the press spin is a hoax, how is their response deployed? Does their response go along with the hoax or not? What’s the game theory answer?

Pierre Lemieux

Apr 18 2024 at 6:13pm

Mike: A simple PD game (like the one on my chart) explains how two rational rulers who do not want war can be led into it by rational calculations. As I pointed out by citing Axelrod, modeling dynamic interactions (in many rounds) can lead to a very different result. Yet, if you include the certain belief that one strategy leads to eternal life, it would become and remain the dominant strategy. Game theory and economic analysis provide ways to think about a problem (war in this case) at different levels of complexity.

Jose Pablo

Apr 18 2024 at 7:15pm

if you include the certain belief that one strategy leads to eternal life

The profoundly dystopian visions of rulers play a very significant role in wars: God calls to spread His word, the restoration of the Soviet glory, the supremacy of the Aryan race…

To the point that it defies credulity that such a significant part of the ruled so enthusiastically risk their lives to follow such idiocies.

Pierre Lemieux

Apr 18 2024 at 9:33pm

Jose: Yes, and that opens a whole new field of inquiry. There must be a relation between how the ruled are ruled (how powerful the state is) and how willing they are to risk their lives to defend their way of life. It is a complex relation, though, certainly not linear. If we prudently accept that no really free society could resist the Russian or Chinese tyrant or even the North Korean or Iranian tyrant, we might explore “practical” ways in which the tables could be turned. What about those who don’t want to fight having to pay higher taxes to pay the attractive salaries (plus family pensions in case of death or incapacitating injuries, etc.) necessary to attract as many volunteers as needed? Technological warfare does not require Napoleonic boots-on-the-ground armies anyway. (I put “practical” in scare quotes because it requires some optimism to imagine how people who don’t want markets to determine prices when a tornado strikes would accept that the market determines the remuneration of soldiers in a war situation.)

Jim Glass

Apr 18 2024 at 1:54pm

“Ritter … posted a tweet in April 2022 claiming that the National Police of Ukraine was responsible for the Bucha massacre and calling U.S. President Joe Biden a “war criminal” for “seeking to shift blame for the Bucha murders” … Ritter is a contributor to Russian government-owned media outlets RT and Sputnik. He compared Ukraine to a “rabid dog” that needed to be shot. He compared the treatment of Russians under Ukraine law to Nazi Germany’s treatment of Jews…” [Wiki]

No bias there! Nope. None at all.

Kevin Corcoran

Apr 18 2024 at 2:58pm

I’ve been aware of Scott Ritter since the mid 2000s. If there was ever anyone who embodies the old moniker of “useful idiot,” it would be him. As much as I try to be charitable when evaluating people’s arguments (for better or worse), as far as I’ve ever seen Ritter has never been able to rise above the level of ranting in his writing and his talks.

Jim Glass

Apr 18 2024 at 11:20pm

Scott might be deemed worse than that. I didn’t mention, because it didn’t seem relevant to Ukraine, that he is a convicted sex offender who has served prison time (“I never believed she was 15, like she said”), but now that he is going across Russia making money bragging about it, it’s relevant. Some years ago the NY Times covered this part of his story in a larger profile.

Ahmed Fares

Apr 18 2024 at 3:10pm

Watch the following video. Note in what direction the people turn their heads before the missile strikes. Social media users, with Google Street View, figured out that the missile came from a different direction than what the Ukrainians claimed. The New York Times issued a retraction. Yes, the Ukrainians lied.

CCTV captures moment Russian missile strikes market in Donetsk, Ukraine

Pierre Lemieux

Apr 18 2024 at 5:50pm

Ahmed: That’s a good test. Did Ritter ever issue a retraction?

Jose Pablo

Apr 18 2024 at 7:28pm

Ahmed, I am a little lost here.

If I get it right, you argue that because the Ukrainians blamed Russia* for a missile strike caused, during a Russian missile attack, by an errant Ukrainian air defense, it is plausible that the National Police of Ukraine was responsible for the Bucha massacre.

If this is the case, your argument makes no logical sense. Like at all.

* Note that from a moral standpoint, even if that particular strike was caused by a Ukrainian air defense missile, Russia is still to blame for that since that strike would have never happened in the absence of the Russian missile attack.

Ahmed Fares

Apr 18 2024 at 9:51pm

Ukraine has been feeding Western media a constant stream of lies from the beginning of the war starting with the Ghost of Kiev. The worst was by Ukraine’s human rights chief Lyudmila Denisova who the Ukrainians fired for making up false stories of Russians raping women and children. She said she did it to keep Western aid flowing. Anyway, on to Bucha. (Yes, this is from a Russian site but I have seen this on many other sites and forums but this happens to be the best explanation.)

MSM’s Bucha Tall Tale

The following site tells the stages of human decomposition. Compare it with the timeline in the Sputnik Globe article.

The Stages Of Human Decomposition

Check out the nails on this woman’s hands.

Bucha massacre victim identified by make-up artist who recognised her red nail polish

Jose Pablo

Apr 18 2024 at 10:58pm

Thank you, Ahmed. Now I feel better. I thought that the plausibility of all these stories came just from the false reports on the errant missile.

Now I see that there is a whole body of evidence.

Pierre Lemieux

Apr 18 2024 at 11:59pm

Ahmed: I, for one, would need better, more diversified, and (generally) more credible sources. Where are all these competing journalists chasing a Pulitzer Prize?

Jim Glass

Apr 19 2024 at 12:26am

[Ahmed Fares wrote:]

Yes, this is from a Russian site but…

Yes, you do value Russian sites quoting each other. But if only for breadth of view, why not quote some United Nations sources? …

UN report details summary executions of civilians by Russian troops in northern Ukraine, maybe even read the full text pdf – 34 pages of factual learning.

and perhaps…

UN Commission of Inquiry on Ukraine finds continued systematic and widespread use of torture and indiscriminate attacks harming civilians

and…

Ukraine: Report documents mounting deaths, rights violations

Heck, the Independent International Commission of Inquiry on Ukraine has an entire index of reports, so much for your friends at Sputnik et al. to earn their rubles explaining away.
